AWS Certified DevOps Engineer - Professional

Last Update Mar 1, 2026

Total Questions : 419

A company frequently creates Docker images stored in Amazon ECR, with both tagged and untagged versions. The company wants to delete stale or unused images while keeping a minimum count.

Which solution meets this requirement?
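As a rough illustration of the kind of rule set this scenario describes, the sketch below builds an Amazon ECR lifecycle policy document in Python. The function name, the 14-day age limit, and the retained image count are hypothetical values chosen for illustration and are not part of the question. The sketch only produces the JSON text and does not call AWS.

```python
import json


def build_ecr_lifecycle_policy(keep_count: int, untagged_max_age_days: int) -> str:
    """Return lifecycle policy JSON text (hypothetical helper, illustrative values)."""
    if keep_count < 1 or untagged_max_age_days < 1:
        raise ValueError("counts must be positive")
    policy = {
        "rules": [
            {
                # Expire untagged images once they are older than the age limit.
                "rulePriority": 1,
                "description": "Expire stale untagged images",
                "selection": {
                    "tagStatus": "untagged",
                    "countType": "sinceImagePushed",
                    "countUnit": "days",
                    "countNumber": untagged_max_age_days,
                },
                "action": {"type": "expire"},
            },
            {
                # Keep only the newest keep_count images overall. A rule with
                # tagStatus "any" must carry the highest rulePriority value.
                "rulePriority": 2,
                "description": "Keep a minimum number of recent images",
                "selection": {
                    "tagStatus": "any",
                    "countType": "imageCountMoreThan",
                    "countNumber": keep_count,
                },
                "action": {"type": "expire"},
            },
        ]
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(build_ecr_lifecycle_policy(keep_count=10, untagged_max_age_days=14))
```

The resulting text could be supplied as the lifecycle policy of a repository. The thresholds would need to match the company's actual retention needs.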

A company uses the AWS Cloud Development Kit (AWS CDK) to define its application. The company uses a pipeline that consists of AWS CodePipeline and AWS CodeBuild to deploy the CDK application. The company wants to introduce unit tests to the pipeline to test various infrastructure components. The company wants to ensure that a deployment proceeds if no unit tests result in a failure.

Which combination of steps will enforce the testing requirement in the pipeline? (Select TWO.)

A DevOps engineer needs to implement a CI/CD pipeline in an AWS account. The pipeline must consume sensitive database credentials that are stored in an AWS Systems Manager Parameter Store parameter. The Parameter Store parameter is in a separate central account. The DevOps engineer needs to create and integrate the parameter with the CI/CD account.

Which combination of steps will meet these requirements? (Select THREE.)

A company requires its developers to tag all Amazon Elastic Block Store (Amazon EBS) volumes in an account to indicate a desired backup frequency. This requirement includes EBS volumes that do not require backups. The company uses custom tags named Backup_Frequency that have values of none, daily, or weekly that correspond to the desired backup frequency. An audit finds that developers are occasionally not tagging the EBS volumes.

A DevOps engineer needs to ensure that all EBS volumes always have the Backup_Frequency tag so that the company can perform backups at least weekly unless a different value is specified.

Which solution will meet these requirements?

A company wants to use a grid system for a proprietary enterprise in-memory data store on top of AWS. This system can run in multiple server nodes in any Linux-based distribution. The system must be able to reconfigure the entire cluster every time a node is added or removed. When adding or removing nodes, an /etc/cluster/nodes config file must be updated, listing the IP addresses of the current node members of that cluster.

The company wants to automate the task of adding new nodes to a cluster.

What can a DevOps engineer do to meet these requirements?

A company uses an Amazon Elastic Kubernetes Service (Amazon EKS) cluster to host its machine learning (ML) application. As the ML model and the container image size grow, the time that new pods take to start up has increased to several minutes.

A DevOps engineer needs to reduce the startup time to seconds. The solution must also reduce the startup time to seconds when the pod runs on nodes that were recently added to the cluster.

The DevOps engineer creates an Amazon EventBridge rule that invokes an automation in AWS Systems Manager. The automation prefetches the container images from an Amazon Elastic Container Registry (Amazon ECR) repository when new images are pushed to the repository. The DevOps engineer also configures tags to be applied to the cluster and the node groups.

What should the DevOps engineer do next to meet the requirements?

A company's DevOps engineer uses AWS Systems Manager to perform maintenance tasks during maintenance windows. The company has a few Amazon EC2 instances that require a restart after notifications from AWS Health. The DevOps engineer needs to implement an automated solution to remediate these notifications. The DevOps engineer creates an Amazon EventBridge rule.

How should the DevOps engineer configure the EventBridge rule to meet these requirements?

A company uses Amazon Elastic Container Registry (Amazon ECR) for all images of the company's containerized infrastructure. The company uses the pull through cache functionality with the /external prefix to avoid throttling when the company retrieves images from external image registries. The company uses AWS Organizations for its accounts.

Every image in the registry must be encrypted with a specific, pre-provisioned AWS Key Management Service (AWS KMS) key. The company's internally created images already comply with this policy. However, cached external images use server-side encryption with Amazon S3 managed keys (SSE-S3).

The company must remove the noncompliant cache repositories. The company must also implement a secure solution to ensure that all new pull through cache repositories are automatically encrypted with the required KMS key.

Which solution will meet these requirements?

A company is migrating its web application to AWS. The application uses WebSocket connections for real-time updates and requires sticky sessions.

A DevOps engineer must implement a highly available architecture for the application. The application must be accessible to users worldwide with the least possible latency.

Which solution will meet these requirements with the LEAST operational overhead?

An online retail company based in the United States plans to expand its operations to Europe and Asia in the next six months. Its product currently runs on Amazon EC2 instances behind an Application Load Balancer. The instances run in an Amazon EC2 Auto Scaling group across multiple Availability Zones. All data is stored in an Amazon Aurora database instance.

When the product is deployed in multiple regions, the company wants a single product catalog across all regions, but for compliance purposes, its customer information and purchases must be kept in each region.

How should the company meet these requirements with the LEAST amount of application changes?

A company runs applications on Windows and Linux Amazon EC2 instances. The instances run across multiple Availability Zones in an AWS Region. The company uses Auto Scaling groups for each application.

The company needs a durable storage solution for the instances. The solution must use SMB for Windows and must use NFS for Linux. The solution must also have sub-millisecond latencies. All instances will read and write the data.

Which combination of steps will meet these requirements? (Select THREE.)

A company's application teams use AWS CodeCommit repositories for their applications. The application teams have repositories in multiple AWS accounts. All accounts are in an organization in AWS Organizations.

Each application team uses AWS IAM Identity Center (AWS Single Sign-On) configured with an external IdP to assume a developer IAM role. The developer role allows the application teams to use Git to work with the code in the repositories.

A security audit reveals that the application teams can modify the main branch in any repository. A DevOps engineer must implement a solution that allows the application teams to modify the main branch of only the repositories that they manage.

Which combination of steps will meet these requirements? (Select THREE.)

A company recently deployed its web application on AWS. The company is preparing for a large-scale sales event and must ensure that the web application can scale to meet the demand.

The application's frontend infrastructure includes an Amazon CloudFront distribution that has an Amazon S3 bucket as an origin. The backend infrastructure includes an Amazon API Gateway API, several AWS Lambda functions, and an Amazon Aurora DB cluster.

The company's DevOps engineer conducts a load test and identifies that the Lambda functions can fulfill the peak number of requests. However, the DevOps engineer notices request latency during the initial burst of requests. Most of the requests to the Lambda functions produce queries to the database. A large portion of the invocation time is used to establish database connections.

Which combination of steps will provide the application with the required scalability? (Select TWO.)

A company uses AWS CodeArtifact to centrally store Python packages. The CodeArtifact repository is configured with the following repository policy.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Action": [
        "codeartifact:DescribePackageVersion",
        "codeartifact:DescribeRepository",
        "codeartifact:GetPackageVersionReadme",
        "codeartifact:GetRepositoryEndpoint",
        "codeartifact:ListPackageVersionAssets",
        "codeartifact:ListPackageVersionDependencies",
        "codeartifact:ListPackageVersions",
        "codeartifact:ListPackages",
        "codeartifact:ReadFromRepository"
      ],
      "Effect": "Allow",
      "Resource": "*",
      "Principal":
      "Condition": {
        "StringEquals": {
          "aws:PrincipalOrgID": ["o-xxxxxxxxxxx"]
        }
      }
    }
  ]
}

A development team is building a new project in an account that is in an organization in AWS Organizations. The development team wants to use a Python library that has already been stored in the CodeArtifact repository in the organization. The development team uses AWS CodePipeline and AWS CodeBuild to build the new application. The CodeBuild job that the development team uses to build the application is configured to run in a VPC. Because of compliance requirements, the VPC has no internet connectivity.

The development team creates the VPC endpoints for CodeArtifact and updates the CodeBuild buildspec YAML file. However, the development team cannot download the Python library from the repository.

Which combination of steps should a DevOps engineer take so that the development team can use CodeArtifact? (Select TWO.)

A company uses an organization in AWS Organizations to manage its AWS accounts. The company recently acquired another company that has standalone AWS accounts. The acquiring company's DevOps team needs to consolidate the administration of the AWS accounts for both companies and retain full administrative control of the accounts. The DevOps team also needs to collect and group findings across all the accounts to implement and maintain a security posture.

Which combination of steps should the DevOps team take to meet these requirements? (Select TWO.)

A company wants to use AWS development tools to replace its current bash deployment scripts. The company currently deploys a LAMP application to a group of Amazon EC2 instances behind an Application Load Balancer (ALB). During the deployments, the company unit tests the committed application, stops and starts services, unregisters and re-registers instances with the load balancer, and updates file permissions. The company wants to maintain the same deployment functionality through the shift to using AWS services.

Which solution will meet these requirements?

A company is divided into teams. Each team has an AWS account, and all the accounts are in an organization in AWS Organizations. Each team must retain full administrative rights to its AWS account. Each team also must be allowed to access only AWS services that the company approves for use. AWS services must gain approval through a request and approval process.

How should a DevOps engineer configure the accounts to meet these requirements?

A company has a continuous integration pipeline where the company creates container images by using AWS CodeBuild. The created images are stored in Amazon Elastic Container Registry (Amazon ECR). Checking for and fixing the vulnerabilities in the images takes the company too much time. The company wants to identify the image vulnerabilities quickly and notify the security team of the vulnerabilities.

Which combination of steps will meet these requirements with the LEAST operational overhead? (Select TWO.)

A company has developed a web application that conducts seasonal sales on public holidays. The web application is deployed on AWS and uses AWS services for storage, database, computing, and encryption. During seasonal sales, the company expects high network traffic from many users. The company must receive insights regarding any unexpected behavior during the sale. A DevOps team wants to review the insights upon detecting anomalous behaviors during the sale. The DevOps team wants to receive recommended actions to resolve the anomalous behaviors. The recommendations must be provided on the provisioned infrastructure to address issues that might occur in the future.

Which combination of steps will meet these requirements with the LEAST operational overhead? (Select TWO.)

A company manages an application that stores logs in Amazon CloudWatch Logs. The company wants to archive the logs to an Amazon S3 bucket. Logs are rarely accessed after 90 days and must be retained for 10 years.

Which combination of steps should a DevOps engineer take to meet these requirements? (Select TWO.)
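To make the retention arithmetic in this scenario concrete, the following sketch builds an Amazon S3 lifecycle configuration as a Python dictionary in the shape that the S3 lifecycle API accepts. The function name, the rule ID, the choice of the GLACIER storage class, and the approximation of 10 years as 3,650 days are all assumptions made for illustration. The sketch does not call AWS.

```python
import json


def build_archive_lifecycle(transition_days: int = 90,
                            retention_days: int = 3650) -> dict:
    """Return a lifecycle configuration dict (hypothetical helper).

    transition_days: age at which objects move to colder storage.
    retention_days: age at which objects expire (10 years approximated
    as 3650 days, an assumption that ignores leap days).
    """
    if not 0 < transition_days < retention_days:
        raise ValueError("transition must happen before expiration")
    return {
        "Rules": [
            {
                "ID": "archive-then-expire",       # hypothetical rule name
                "Filter": {"Prefix": ""},          # apply to every object
                "Status": "Enabled",
                "Transitions": [
                    # Storage class is an assumption; any archival class
                    # suited to rarely accessed data could be used here.
                    {"Days": transition_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": retention_days},
            }
        ]
    }


if __name__ == "__main__":
    print(json.dumps(build_archive_lifecycle(), indent=2))
```

A dictionary of this shape could be passed as the lifecycle configuration of the archive bucket. How the logs reach the bucket in the first place is a separate concern that this sketch does not cover.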

A company has an application that runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The EC2 instances are in multiple Availability Zones. The application was misconfigured in a single Availability Zone, which caused a partial outage of the application.

A DevOps engineer made changes to ensure that the unhealthy EC2 instances in one Availability Zone do not affect the healthy EC2 instances in the other Availability Zones. The DevOps engineer needs to test the application's failover and shift where the ALB sends traffic. During failover, the ALB must avoid sending traffic to the Availability Zone where the failure has occurred.

Which solution will meet these requirements?

A company uses AWS Organizations, AWS Control Tower, AWS Config, and Terraform to manage its AWS accounts and resources. The company must ensure that users deploy only AWS Lambda functions that are connected to a VPC in member AWS accounts.

Which solution will meet these requirements with the LEAST operational effort?

A company runs an application with an Amazon EC2 and on-premises configuration. A DevOps engineer needs to standardize patching across both environments. Company policy dictates that patching only happens during non-business hours.

Which combination of actions will meet these requirements? (Choose three.)

An IT team has built an AWS CloudFormation template so others in the company can quickly and reliably deploy and terminate an application. The template creates an Amazon EC2 instance with a user data script to install the application and an Amazon S3 bucket that the application uses to serve static webpages while it is running.

All resources should be removed when the CloudFormation stack is deleted. However, the team observes that CloudFormation reports an error during stack deletion, and the S3 bucket created by the stack is not deleted.

How can the team resolve the error in the MOST efficient manner to ensure that all resources are deleted without errors?

A company has deployed an Amazon Elastic Kubernetes Service (Amazon EKS) cluster with Amazon EC2 node groups. The company's DevOps team uses the Kubernetes Horizontal Pod Autoscaler and recently installed a supported EKS cluster Autoscaler.

The DevOps team needs to implement a solution to collect metrics and logs of the EKS cluster to establish a baseline for performance. The DevOps team will create an initial set of thresholds for specific metrics and will update the thresholds over time as the cluster is used. The DevOps team must receive an Amazon Simple Notification Service (Amazon SNS) email notification if the initial set of thresholds is exceeded or if the EKS cluster Autoscaler is not functioning properly.

The solution must collect cluster, node, and pod metrics. The solution also must capture logs in Amazon CloudWatch.

Which combination of steps should the DevOps team take to meet these requirements? (Select THREE.)

A DevOps engineer needs to implement integration tests into an existing AWS CodePipeline CI/CD workflow for an Amazon Elastic Container Service (Amazon ECS) service. The CI/CD workflow retrieves new application code from an AWS CodeCommit repository and builds a container image. The CI/CD workflow then uploads the container image to Amazon Elastic Container Registry (Amazon ECR) with a new image tag version.

The integration tests must ensure that new versions of the service endpoint are reachable and that various API methods return successful response data. The DevOps engineer has already created an ECS cluster to test the service.

Which combination of steps will meet these requirements with the LEAST management overhead? (Select THREE.)

A company uses an organization in AWS Organizations to manage multiple AWS accounts in a hierarchical structure. An SCP that is associated with the organization root allows IAM users to be created.

A DevOps team must be able to create IAM users with any level of permissions. Developers must also be able to create IAM users. However, developers must not be able to grant new IAM users excessive permissions. The developers have the CreateAndManageUsers role in each account. The DevOps team must be able to prevent other users from creating IAM users.

Which combination of steps will meet these requirements? (Select TWO.)

A security review has identified that an AWS CodeBuild project is downloading a database population script from an Amazon S3 bucket using an unauthenticated request. The security team does not allow unauthenticated requests to S3 buckets for this project.

How can this issue be corrected in the MOST secure manner?

A company is hosting a web application in an AWS Region. For disaster recovery purposes, a second region is being used as a standby. Disaster recovery requirements state that session data must be replicated between regions in near-real time and 1% of requests should route to the secondary region to continuously verify system functionality. Additionally, if there is a disruption in service in the main region, traffic should be automatically routed to the secondary region, and the secondary region must be able to scale up to handle all traffic.

How should a DevOps engineer meet these requirements?
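The 1% figure in this scenario can be checked with simple arithmetic. Under weighted DNS routing, each record receives a share of traffic equal to its weight divided by the sum of all weights. The sketch below computes those shares. The function name and the record names "primary" and "secondary" are hypothetical, and the weights 99 and 1 are just one pair that yields the stated split.

```python
def traffic_share(weights: dict[str, int]) -> dict[str, float]:
    """Return each record's expected fraction of requests.

    Share = weight / sum of all weights, which is how weighted
    routing distributes traffic among records.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: weight / total for name, weight in weights.items()}


if __name__ == "__main__":
    # Hypothetical record names; 99:1 gives the secondary Region 1%.
    for name, share in traffic_share({"primary": 99, "secondary": 1}).items():
        print(f"{name}: {share:.2%}")
```

Any pair of weights in the same ratio gives the same split. Failover behavior and session replication are separate parts of the requirement that this calculation does not address.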

A company manages AWS accounts for application teams in AWS Control Tower. Individual application teams are responsible for securing their respective AWS accounts.

A DevOps engineer needs to enable Amazon GuardDuty for all AWS accounts in which the application teams have not already enabled GuardDuty. The DevOps engineer is using AWS CloudFormation StackSets from the AWS Control Tower management account.

How should the DevOps engineer configure the CloudFormation template to prevent failure during the StackSets deployment?

A company builds a container image in an AWS CodeBuild project by running Docker commands. After the container image is built, the CodeBuild project uploads the container image to an Amazon S3 bucket. The CodeBuild project has an IAM service role that has permissions to access the S3 bucket.

A DevOps engineer needs to replace the S3 bucket with an Amazon Elastic Container Registry (Amazon ECR) repository to store the container images. The DevOps engineer creates an ECR private image repository in the same AWS Region of the CodeBuild project. The DevOps engineer adjusts the IAM service role with the permissions that are necessary to work with the new ECR repository. The DevOps engineer also places new repository information into the docker build command and the docker push command that are used in the buildspec.yml file.

When the CodeBuild project runs a build job, the job fails when the job tries to access the ECR repository.

Which solution will resolve the issue of failed access to the ECR repository?

A company uses an AWS CodeArtifact repository to store Python packages that the company developed internally. A DevOps engineer needs to use AWS CodeDeploy to deploy an application to an Amazon EC2 instance. The application uses a Python package that is stored in the CodeArtifact repository. A BeforeInstall lifecycle event hook will install the package.

The DevOps engineer needs to grant the EC2 instance access to the CodeArtifact repository.

Which solution will meet this requirement?

A company uses a pipeline in AWS CodePipeline to upload AWS CloudFormation templates to an Amazon S3 bucket. The pipeline uses the templates to deploy CloudFormation stacks that match the names of the templates.

The company has experienced issues when it tries to revert templates to a previous version. To prevent these issues, the company must have the ability to review template modifications before the modifications are deployed to production.

Which solution will meet these requirements with the LEAST operational overhead?

A company is developing a web application that runs on Amazon EC2 Linux instances. The application requires monitoring of custom performance metrics. The company must collect metrics for API response times and database query latency across multiple instances.

Which solution will generate the custom metrics with the LEAST operational overhead?

A DevOps engineer has automated a web service deployment by using AWS CodePipeline with the following steps:

1) An AWS CodeBuild project compiles the deployment artifact and runs unit tests.

2) An AWS CodeDeploy deployment group deploys the web service to Amazon EC2 instances in the staging environment.

3) A CodeDeploy deployment group deploys the web service to EC2 instances in the production environment.

The quality assurance (QA) team requests permission to inspect the build artifact before the deployment to the production environment occurs. The QA team wants to run an internal penetration testing tool to conduct manual tests. The tool will be invoked by a REST API call.

Which combination of actions should the DevOps engineer take to fulfill this request? (Choose two.)

A company runs an application in an Auto Scaling group of Amazon EC2 instances behind an Application Load Balancer (ALB). The EC2 instances run Docker containers that make requests to a MySQL database that runs on separate EC2 instances. A DevOps engineer needs to update the application to use a serverless architecture.

Which solution will meet this requirement with the FEWEST changes?

A company deployed an Amazon CloudFront distribution that accepts requests and routes to an Amazon API Gateway HTTP API. During a recent security audit, the company discovered that requests from the internet could reach the HTTP API without using the CloudFront distribution.

A DevOps engineer must ensure that connections to the HTTP API use the CloudFront distribution.

Which solution will meet these requirements?

A company runs an application on Amazon EKS. The company needs comprehensive logging for the control plane and nodes. The company also needs to analyze API requests and monitor container performance with minimal operational overhead.

Which solution meets these requirements?

A company has microservices running in AWS Lambda that read data from Amazon DynamoDB. The Lambda code is manually deployed by developers after successful testing. The company now needs the tests and deployments to be automated and run in the cloud. Additionally, traffic to the new versions of each microservice should be incrementally shifted over time after deployment.

What solution meets all the requirements, ensuring the MOST developer velocity?

A company deploys updates to its Amazon API Gateway API several times a week by using an AWS CodePipeline pipeline. As part of the update process, the company exports the JavaScript SDK for the API from the API Gateway console and uploads the SDK to an Amazon S3 bucket.

The company has configured an Amazon CloudFront distribution that uses the S3 bucket as an origin. Web clients then download the SDK by using the CloudFront distribution's endpoint. A DevOps engineer needs to implement a solution to make the new SDK available automatically during new API deployments.

Which solution will meet these requirements?

A DevOps engineer is working on a data archival project that requires the migration of on-premises data to an Amazon S3 bucket. The DevOps engineer develops a script that incrementally archives on-premises data that is older than 1 month to Amazon S3. Data that is transferred to Amazon S3 is deleted from the on-premises location. The script uses the S3 PutObject operation.

During a code review, the DevOps engineer notices that the script does not verify whether the data was successfully copied to Amazon S3. The DevOps engineer must update the script to ensure that data is not corrupted during transmission. The script must use MD5 checksums to verify data integrity before the on-premises data is deleted.

Which solutions for the script will meet these requirements? (Select TWO.)
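For readers unfamiliar with how MD5 values appear around an S3 upload, the sketch below computes the two common encodings of a digest with the Python standard library. The base64 form is the encoding used by the Content-MD5 request header, and the hexadecimal form is what the returned ETag contains for a single-part upload that is not encrypted with AWS KMS. The function names are hypothetical, and the sketch does not call AWS or represent the script described in the question.

```python
import base64
import hashlib


def md5_digests(data: bytes) -> tuple[str, str]:
    """Return (hex digest, base64 digest) of the MD5 hash of data."""
    digest = hashlib.md5(data)
    hex_form = digest.hexdigest()
    b64_form = base64.b64encode(digest.digest()).decode("ascii")
    return hex_form, b64_form


def etag_matches(etag: str, data: bytes) -> bool:
    """Compare a returned ETag with the local MD5 hex digest.

    Assumption: the object was uploaded in a single part and is not
    encrypted with AWS KMS, so the ETag is the plain MD5 hex digest.
    S3 returns the ETag wrapped in double quotes, which are stripped.
    """
    hex_form, _ = md5_digests(data)
    return etag.strip('"').lower() == hex_form


if __name__ == "__main__":
    payload = b"hello"
    hex_form, b64_form = md5_digests(payload)
    print("Content-MD5 header value:", b64_form)
    print("ETag matches:", etag_matches(f'"{hex_form}"', payload))
```

Only after a check of this kind succeeds would it be safe for a script to delete the local copy. Multipart uploads and KMS-encrypted objects produce ETags that are not plain MD5 digests, so the comparison above would not apply to them.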

A company uses an organization in AWS Organizations to manage multiple AWS accounts in multiple OUs. The company is planning to implement a comprehensive account management solution and wants to ensure consistent baseline configurations.

A DevOps engineer is developing a solution to automatically deploy AWS CloudFormation templates to new AWS accounts. The specific CloudFormation template that the solution deploys must vary based on which organizational unit (OU) each new account is placed in.

Which solution will meet these requirements with the LEAST operational overhead?

A DevOps engineer needs to install antivirus software on all Amazon EC2 instances in an AWS account. The EC2 instances run the most recent Amazon Linux version. The solution must detect all instances and use an AWS Systems Manager document to install the software if missing.

Which solution will meet these requirements?

A development team wants to use AWS CloudFormation stacks to deploy an application. However, the developer IAM role does not have the required permissions to provision the resources that are specified in the AWS CloudFormation template. A DevOps engineer needs to implement a solution that allows the developers to deploy the stacks. The solution must follow the principle of least privilege.

Which solution will meet these requirements?

A company uses AWS CodePipeline and AWS CodeDeploy to deploy application code to Amazon EC2 instances. The EC2 instances send application logs and CodeDeploy logs to Amazon CloudWatch.

Recently, the company manually rolled back a deployment because of application errors. The company wants to automate the rollback process when application errors occur.

Which solution will meet these requirements?

A company has an application that uses an Amazon API Gateway REST API, AWS Lambda functions, and an Amazon DynamoDB table. The application currently runs in a single AWS Region. The company wants to make the application highly available across two Regions. User traffic must be routed to the Region that provides the least latency.

Which combination of steps will meet these requirements? (Select THREE.)

A DevOps engineer has implemented a CI/CD pipeline to deploy an AWS CloudFormation template that provisions a web application. The web application consists of an Application Load Balancer (ALB), a target group, a launch template that uses an Amazon Linux 2 AMI, an Auto Scaling group of Amazon EC2 instances, a security group, and an Amazon RDS for MySQL database. The launch template includes user data that specifies a script to install and start the application.

The initial deployment of the application was successful. The DevOps engineer made changes to update the version of the application with the user data. The CI/CD pipeline has deployed a new version of the template. However, the health checks on the ALB are now failing. The health checks have marked all targets as unhealthy.

During investigation, the DevOps engineer notices that the CloudFormation stack has a status of UPDATE_COMPLETE. However, when the DevOps engineer connects to one of the EC2 instances and checks /var/log/messages, the DevOps engineer notices that the Apache web server failed to start successfully because of a configuration error.

How can the DevOps engineer ensure that the CloudFormation deployment will fail if the user data fails to successfully finish running?

A company uses an organization in AWS Organizations to manage multiple AWS accounts. The company needs an automated process across all AWS accounts to isolate any compromised Amazon EC2 instances when the instances receive a specific tag.

Which combination of steps will meet these requirements? (Select TWO.)

A company's application has an API that retrieves workload metrics. The company needs to audit, analyze, and visualize these metrics from the application to detect issues at scale.

Which combination of steps will meet these requirements? (Select THREE.)

A company wants to decrease the time it takes to develop new features. The company uses AWS CodeBuild and AWS CodeDeploy to build and deploy its applications. The company uses AWS CodePipeline to deploy each microservice with its own CI/CD pipeline. The company needs more visibility into the average time between the release of new features and the average time to recover after a failed deployment.

Which solution will provide this visibility with the LEAST configuration effort?

A company has many applications. Different teams in the company developed the applications by using multiple languages and frameworks. The applications run on premises and on different servers with different operating systems. Each team has its own release protocol and process. The company wants to reduce the complexity of the release and maintenance of these applications.

The company is migrating its technology stacks, including these applications, to AWS. The company wants centralized control of source code, a consistent and automatic delivery pipeline, and as few maintenance tasks as possible on the underlying infrastructure.

What should a DevOps engineer do to meet these requirements?

A company needs a strategy for failover and disaster recovery of its data and application. The application uses a MySQL database and Amazon EC2 instances. The company requires a maximum RPO of 2 hours and a maximum RTO of 10 minutes for its data and application at all times.

Which combination of deployment strategies will meet these requirements? (Select TWO.)

A company uses Amazon Elastic Kubernetes Service (Amazon EKS) to host containerized applications that are available in Amazon Elastic Container Registry (Amazon ECR).

The company currently launches EKS clusters in the company's development environment by using the AWS CLI aws eks create-cluster command. The company uses the aws eks create-addon command to install required add-ons. All installed add-ons are currently version compatible with the version of Kubernetes that the company uses. All clusters exclusively use managed node groups for compute capacity.

Some of the EKS clusters require a version upgrade. A DevOps engineer must ensure that upgrades continuously occur within the AWS standard support schedule.

Which solution will meet this requirement with the LEAST operational overhead?

A company must encrypt all AMIs that the company shares across accounts. A DevOps engineer has access to a source account where an unencrypted custom AMI has been built. The DevOps engineer also has access to a target account where an Amazon EC2 Auto Scaling group will launch EC2 instances from the AMI. The DevOps engineer must share the AMI with the target account.

The company has created an AWS Key Management Service (AWS KMS) key in the source account.

Which additional steps should the DevOps engineer perform to meet the requirements? (Choose three.)

A DevOps engineer is architecting a continuous development strategy for a company's software as a service (SaaS) web application running on AWS. For application and security reasons, users subscribing to this application are distributed across multiple Application Load Balancers (ALBs), each of which has a dedicated Auto Scaling group and fleet of Amazon EC2 instances. The application does not require a build stage, and when it is committed to AWS CodeCommit, the application must trigger a simultaneous deployment to all ALBs, Auto Scaling groups, and EC2 fleets.

Which architecture will meet these requirements with the LEAST amount of configuration?

A company is migrating its container-based workloads to an AWS Organizations multi-account environment. The environment consists of application workload accounts that the company uses to deploy and run the containerized workloads. The company has also provisioned a shared services account for shared workloads in the organization.

The company must follow strict compliance regulations. All container images must receive security scanning before they are deployed to any environment. Images can be consumed by downstream deployment mechanisms after the images pass a scan with no critical vulnerabilities. Pre-scan and post-scan images must be isolated from one another so that a deployment can never use pre-scan images.

A DevOps engineer needs to create a strategy to centralize this process.

Which combination of steps will meet these requirements with the LEAST administrative overhead? (Select TWO.)

A company is migrating its on-premises Windows applications and Linux applications to AWS. The company will use automation to launch Amazon EC2 instances to mirror the on-premises configurations. The migrated applications require access to shared storage that uses SMB for Windows and NFS for Linux.

The company is also creating a pilot light disaster recovery (DR) environment in another AWS Region. The company will use automation to launch and configure the EC2 instances in the DR Region. The company needs to replicate the storage to the DR Region.

Which storage solution will meet these requirements?

A company uses AWS Organizations to manage its AWS accounts. The company has a root OU that has a child OU. The root OU has an SCP that allows all actions on all resources. The child OU has an SCP that allows all actions for Amazon DynamoDB and AWS Lambda, and denies all other actions.

The company has an AWS account that is named vendor-data in the child OU. A DevOps engineer has an IAM user that is attached to the AdministratorAccess IAM policy in the vendor-data account. The DevOps engineer attempts to launch an Amazon EC2 instance in the vendor-data account but receives an access denied error.

Which change should the DevOps engineer make to launch the EC2 instance in the vendor-data account?
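As a rough illustration of why the request in this scenario is denied, the sketch below models SCP evaluation: an action is permitted only when no level between the root and the account denies it and every level allows it. The `Level` type, the pattern lists, and the sample actions are assumptions made for the example, and the child OU's "denies all other actions" is modeled simply as the absence of an allow.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatchcase


@dataclass
class Level:
    """One node (root, OU, or account) with the SCP patterns attached to it."""
    name: str
    allow: list[str] = field(default_factory=list)
    deny: list[str] = field(default_factory=list)


def scp_permits(action: str, path: list[Level]) -> bool:
    """Return True only if no level denies the action and every level allows it."""
    for level in path:
        if any(fnmatchcase(action, p) for p in level.deny):
            return False  # an explicit deny anywhere on the path wins
        if not any(fnmatchcase(action, p) for p in level.allow):
            return False  # no allow at this level is an implicit deny
    return True


# Hypothetical encoding of the scenario's hierarchy.
path = [
    Level("root", allow=["*"]),
    Level("child-ou", allow=["dynamodb:*", "lambda:*"]),
]

print(scp_permits("ec2:RunInstances", path))   # False
print(scp_permits("dynamodb:PutItem", path))   # True
```

SCPs only set the outer boundary of what an account can do, so an identity policy such as AdministratorAccess cannot grant an action that the SCP path filters out.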

A company hosts applications in its AWS account. Each application logs to an individual Amazon CloudWatch log group. The company’s CloudWatch costs for ingestion are increasing.

A DevOps engineer needs to identify which applications are the source of the increased logging costs.

Which solution will meet these requirements?

A company updated the AWS CloudFormation template for a critical business application. The stack update process failed due to an error in the updated template, and AWS CloudFormation automatically began the stack rollback process. Later, a DevOps engineer discovered that the application was still unavailable and that the stack was in the UPDATE_ROLLBACK_FAILED state.

Which combination of actions should the DevOps engineer perform so that the stack rollback can complete successfully? (Select TWO.)

A company’s web app runs on EC2 Linux instances and needs to monitor custom metrics for API response and DB query latency across instances with least overhead.

Which solution meets this?

A company has an organization in AWS Organizations with many OUs that contain many AWS accounts. The organization has a dedicated delegated administrator AWS account.

The company needs the accounts in one OU to have server-side encryption enforced for all Amazon Elastic Block Store (Amazon EBS) volumes and Amazon Simple Queue Service (Amazon SQS) queues that are created or updated on an AWS CloudFormation stack.

Which solution will enforce this policy before a CloudFormation stack operation in the accounts of this OU?

A company manages environments for its application in multiple AWS accounts. Each environment account is in a different OU in AWS Organizations.

A DevOps team is responsible for the application deployment process across the environments. The deployment process uses an AWS CodePipeline pipeline in a Shared Services account. The DevOps team members are in the same user group. The team members have administrative access to all accounts through AWS IAM Identity Center.

A recent deployment problem in the development environment required the DevOps team to perform manual steps. The deployment to the production environment then resulted in an incident that caused the pipeline to fail, blocking new deployments for several hours.

A DevOps engineer needs to ensure that only the pipeline can perform deployments in the production environment. The DevOps engineer must have access to the environment in case of an emergency.

Which solution will meet these requirements with the MOST operational efficiency?

A company uses AWS Organizations to manage multiple accounts. Information security policies require that all unencrypted Amazon EBS volumes be marked as non-compliant. A DevOps engineer needs to automatically deploy the solution and ensure that this compliance check is always present.

Which solution will accomplish this?

A company is examining its disaster recovery capability and wants the ability to switch over its daily operations to a secondary AWS Region. The company uses AWS CodeCommit as a source control tool in the primary Region.

A DevOps engineer must provide the capability for the company to develop code in the secondary Region. If the company needs to use the secondary Region, developers can add an additional remote URL to their local Git configuration.

Which solution will meet these requirements?

A company needs to update its order processing application to improve resilience and availability. The application requires a stateful database and uses a single-node Amazon RDS DB instance to store customer orders and transaction history. A DevOps engineer must make the database highly available.

Which solution will meet this requirement?

A DevOps engineer needs to configure an AWS CodePipeline pipeline that publishes container images to an Amazon Elastic Container Registry (Amazon ECR) repository. The pipeline must wait for the previous run to finish and must run when new Git tags are pushed to a Git repository that is connected to AWS CodeConnections. An existing deployment pipeline needs to run in response to the publication of new container images.

Which solution will meet these requirements?

A DevOps team supports an application that runs on a large number of Amazon EC2 instances in an Auto Scaling group. The DevOps team uses AWS CloudFormation to deploy the EC2 instances. The application recently experienced an issue. A single instance returned errors to a large percentage of requests. The EC2 instance responded as healthy to both Amazon EC2 and Elastic Load Balancing health checks. The DevOps team collects application logs in Amazon CloudWatch by using the embedded metric format. The DevOps team needs to receive an alert if any EC2 instance is responsible for more than half of all errors.

Which combination of steps will meet these requirements with the LEAST operational overhead? (Select TWO.)
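The alert condition in this scenario ("any EC2 instance is responsible for more than half of all errors") can be stated precisely in a few lines. The sketch below is only a local model of that condition, not a CloudWatch configuration; the function name and the sample instance IDs are hypothetical.

```python
from collections import Counter


def dominant_error_source(error_instance_ids, threshold=0.5):
    """Return the instance ID that produced more than `threshold` of all errors, or None."""
    counts = Counter(error_instance_ids)
    total = sum(counts.values())
    if total == 0:
        return None
    instance_id, count = counts.most_common(1)[0]
    return instance_id if count / total > threshold else None


# Hypothetical sample: one entry per error, keyed by the instance that returned it.
errors = ["i-aaa", "i-bbb", "i-aaa", "i-aaa", "i-ccc"]
print(dominant_error_source(errors))  # i-aaa (3 of 5 errors)
```

Note that the comparison is strict, so an instance with exactly half of the errors does not trigger the condition.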

A company provides an application to customers. The application has an Amazon API Gateway REST API that invokes an AWS Lambda function. On initialization, the Lambda function loads a large amount of data from an Amazon DynamoDB table. The data load process results in long cold-start times of 8-10 seconds. The DynamoDB table has DynamoDB Accelerator (DAX) configured.

Customers report that the application intermittently takes a long time to respond to requests. The application receives thousands of requests throughout the day. In the middle of the day, the application experiences 10 times more requests than at any other time of the day. Near the end of the day, the application's request volume decreases to 10% of its normal total.

A DevOps engineer needs to reduce the latency of the Lambda function at all times of the day.

Which solution will meet these requirements?

A company uses AWS Organizations to manage its AWS accounts. The organization root has a child OU that is named Department. The Department OU has a child OU that is named Engineering. The default FullAWSAccess policy is attached to the root, the Department OU, and the Engineering OU.

The company has many AWS accounts in the Engineering OU. Each account has an administrative IAM role with the AdministratorAccess IAM policy attached. The default FullAWSAccess policy is also attached to each account.

A DevOps engineer plans to remove the FullAWSAccess policy from the Department OU. The DevOps engineer will replace the policy with a policy that contains an Allow statement for all Amazon EC2 API operations.

What will happen to the permissions of the administrative IAM roles as a result of this change?

A company wants to use AWS CloudFormation for infrastructure deployment. The company has strict tagging and resource requirements and wants to limit the deployment to two Regions. Developers will need to deploy multiple versions of the same application.

Which solution ensures resources are deployed in accordance with company policy?

A company wants governance where only specific Regions and services can be used, with centralized AD authentication and job-function-based roles.

Which solution meets these requirements?

A large company recently acquired a small company. The large company invited the small company to join the large company's existing organization in AWS Organizations as a new OU. A DevOps engineer determines that the small company needs to launch t3.small Amazon EC2 instance types for the company's application workloads. The small company needs to deploy the instances only within US-based AWS Regions. The DevOps engineer needs to use an SCP in the small company's new OU to ensure that the small company can launch only the required instance types.

Which solution will meet these requirements?
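For readers unfamiliar with how an SCP can constrain instance types, the sketch below builds one common shape of such a policy: a Deny on `ec2:RunInstances` whenever the `ec2:InstanceType` condition key is anything other than `t3.small`. The `Sid` value is a made-up label, and the Region restriction from the scenario is deliberately not covered here.

```python
import json

# Deny ec2:RunInstances whenever the requested instance type is not t3.small.
scp = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "RequireT3Small",  # hypothetical statement ID
            "Effect": "Deny",
            "Action": "ec2:RunInstances",
            "Resource": "arn:aws:ec2:*:*:instance/*",
            "Condition": {
                "StringNotEquals": {"ec2:InstanceType": "t3.small"}
            },
        }
    ],
}

print(json.dumps(scp, indent=2))
```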

A company is testing a web application that runs on Amazon EC2 instances behind an Application Load Balancer. The instances run in an Auto Scaling group across multiple Availability Zones. The company uses a blue/green deployment process with immutable instances when deploying new software.

During testing, users are being automatically logged out of the application at random times. Testers also report that when a new version of the application is deployed, all users are logged out. The development team needs a solution to ensure users remain logged in across scaling events and application deployments.

What is the MOST operationally efficient way to ensure users remain logged in?

A company is using AWS Organizations to centrally manage its AWS accounts. The company has turned on AWS Config in each member account by using AWS CloudFormation StackSets. The company has configured trusted access in Organizations for AWS Config and has configured a member account as a delegated administrator account for AWS Config.

A DevOps engineer needs to implement a new security policy. The policy must require all current and future AWS member accounts to use a common baseline of AWS Config rules that contain remediation actions that are managed from a central account. Non-administrator users who can access member accounts must not be able to modify this common baseline of AWS Config rules that are deployed into each member account.

Which solution will meet these requirements?

A DevOps engineer is building a multistage pipeline with AWS CodePipeline to build, verify, stage, test, and deploy an application. A manual approval stage is required between the test stage and the deploy stage. The development team uses a custom chat tool with webhook support that requires near-real-time notifications.

How should the DevOps engineer configure status updates for pipeline activity and approval requests to post to the chat tool?

A company is reviewing its IAM policies. One policy written by the DevOps engineer has been flagged as too permissive. The policy is used by an AWS Lambda function that issues a stop command to Amazon EC2 instances tagged with Environment: NonProduction over the weekend. The current policy is:

What changes should the engineer make to achieve a policy of least permission? (Select THREE.)

A development team is using AWS CodeCommit to version control application code and AWS CodePipeline to orchestrate software deployments. The team has decided to use a remote main branch as the trigger for the pipeline to integrate code changes. A developer has pushed code changes to the CodeCommit repository, but noticed that the pipeline had no reaction, even after 10 minutes.

Which of the following actions should be taken to troubleshoot this issue?

A company uses Amazon S3 to store proprietary information. The development team creates buckets for new projects on a daily basis. The security team wants to ensure that all existing and future buckets have encryption, logging, and versioning enabled. Additionally, no buckets should ever be publicly read or write accessible.

What should a DevOps engineer do to meet these requirements?

A company has deployed an application in a single AWS Region. The application backend uses Amazon DynamoDB tables and Amazon S3 buckets.

The company wants to deploy the application in a secondary Region. The company must ensure that the data in the DynamoDB tables and the S3 buckets persists across both Regions. The data must also immediately propagate across Regions.

Which solution will meet these requirements with the MOST operational efficiency?

A DevOps engineer is building a continuous deployment pipeline for a serverless application that uses AWS Lambda functions. The company wants to reduce the customer impact of an unsuccessful deployment. The company also wants to monitor for issues.

Which deploy stage configuration will meet these requirements?

A DevOps engineer used an AWS CloudFormation custom resource to set up AD Connector. The AWS Lambda function ran and created AD Connector, but CloudFormation is not transitioning from CREATE_IN_PROGRESS to CREATE_COMPLETE.

Which action should the engineer take to resolve this issue?
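As background on the custom resource protocol, CloudFormation waits for the resource provider to report a result to the presigned URL carried in the request event. The sketch below shows the general shape of that response using only the standard library; the sample event values and the physical ID are hypothetical, and `send_response` is not called here because it needs a real presigned URL.

```python
import json
import urllib.request


def build_response(event, status, physical_id, data=None, reason=""):
    """Build the JSON body CloudFormation expects from a custom resource."""
    return {
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason,  # should explain the failure when Status is "FAILED"
        "PhysicalResourceId": physical_id,
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    }


def send_response(event, body):
    """PUT the body to the presigned URL that CloudFormation supplied in the event."""
    payload = json.dumps(body).encode("utf-8")
    request = urllib.request.Request(
        event["ResponseURL"],
        data=payload,
        method="PUT",
        headers={"Content-Type": ""},
    )
    with urllib.request.urlopen(request) as response:
        return response.status


# Hypothetical request event, trimmed to the fields used above.
sample_event = {
    "StackId": "arn:aws:cloudformation:us-east-1:111122223333:stack/demo/example",
    "RequestId": "example-request-id",
    "LogicalResourceId": "ADConnector",
    "ResponseURL": "https://example.com/presigned-url",
}
body = build_response(sample_event, "SUCCESS", "example-physical-id")
print(json.dumps(body, indent=2))
```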

A company has a new AWS account that teams will use to deploy various applications. The teams will create many Amazon S3 buckets for application-specific purposes and to store AWS CloudTrail logs. The company has enabled Amazon Macie for the account.

A DevOps engineer needs to optimize the Macie costs for the account without compromising the account's functionality.

Which solutions will meet these requirements? (Select TWO.)

A global company manages multiple AWS accounts by using AWS Control Tower. The company hosts internal applications and public applications.

Each application team in the company has its own AWS account for application hosting. The accounts are consolidated in an organization in AWS Organizations. One of the AWS Control Tower member accounts serves as a centralized DevOps account with CI/CD pipelines that application teams use to deploy applications to their respective target AWS accounts. An IAM role for deployment exists in the centralized DevOps account.

An application team is attempting to deploy its application to an Amazon Elastic Kubernetes Service (Amazon EKS) cluster in an application AWS account. An IAM role for deployment exists in the application AWS account. The deployment is through an AWS CodeBuild project that is set up in the centralized DevOps account. The CodeBuild project uses an IAM service role for CodeBuild. The deployment is failing with an Unauthorized error during attempts to connect to the cross-account EKS cluster from CodeBuild.

Which solution will resolve this error?

A company configured an Amazon S3 event source for an AWS Lambda function. The company needs the Lambda function to run when a new object is created or an existing object is modified in a specific S3 bucket. The Lambda function will use the S3 bucket name and the S3 object key of the incoming event to read the contents of the new or modified S3 object. The Lambda function will parse the contents and save the parsed contents to an Amazon DynamoDB table.

The Lambda function's execution role has permissions to read from the S3 bucket and to write to the DynamoDB table. During testing, a DevOps engineer discovers that the Lambda function does not run when objects are added to the S3 bucket or when existing objects are modified.

Which solution will resolve these problems?
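The scenario says the function reads the bucket name and object key from the incoming event. A minimal sketch of that step follows, assuming the standard S3 event notification layout; the function name and the sample values are hypothetical, and the sketch covers only what happens once the function is invoked, not why the invocation is missing.

```python
from urllib.parse import unquote_plus


def extract_objects(event):
    """Return (bucket, key) pairs from an S3 event notification payload."""
    pairs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded, with spaces encoded as '+'.
        key = unquote_plus(record["s3"]["object"]["key"])
        pairs.append((bucket, key))
    return pairs


sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "example-bucket"},
                "object": {"key": "reports/2026+march/data%3Av1.csv"},
            },
        }
    ]
}

print(extract_objects(sample_event))
# [('example-bucket', 'reports/2026 march/data:v1.csv')]
```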

A company has deployed a critical application in two AWS Regions. The application uses an Application Load Balancer (ALB) in both Regions. The company has Amazon Route 53 alias DNS records for both ALBs.

The company uses Amazon Route 53 Application Recovery Controller to ensure that the application can fail over between the two Regions. The Route 53 ARC configuration includes a routing control for both Regions. The company uses Route 53 ARC to perform quarterly disaster recovery (DR) tests.

During the most recent DR test, a DevOps engineer accidentally turned off both routing controls. The company needs to ensure that at least one routing control is turned on at all times.

Which solution will meet these requirements?

A company's application runs on Amazon EC2 instances. The application writes to a log file that records the username, date, time, and source IP address of the login. The log is published to a log group in Amazon CloudWatch Logs.

The company is performing a root cause analysis for an event that occurred on the previous day. The company needs to know the number of logins for a specific user from the past 7 days.

Which solution will provide this information?
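One way to picture the requirement is as a CloudWatch Logs Insights query over a 7-day window. The sketch below only builds the parameters for such a query; the log group name, the username, and the assumption that the username appears verbatim in each log message are all hypothetical.

```python
from datetime import datetime, timedelta, timezone


def build_login_count_query(log_group, username, now=None, days=7):
    """Build keyword arguments for a CloudWatch Logs Insights StartQuery call."""
    now = now or datetime.now(timezone.utc)
    start = now - timedelta(days=days)
    query = f'filter @message like "{username}" | stats count(*) as logins'
    return {
        "logGroupName": log_group,
        "startTime": int(start.timestamp()),  # epoch seconds
        "endTime": int(now.timestamp()),
        "queryString": query,
    }


params = build_login_count_query(
    "/app/logins",  # hypothetical log group name
    "alice",        # hypothetical username
    now=datetime(2026, 3, 1, tzinfo=timezone.utc),
)
print(params["queryString"])
# With boto3 installed and credentials configured, the parameters could be
# passed on as: boto3.client("logs").start_query(**params)
```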

A company's application development team uses Linux-based Amazon EC2 instances as bastion hosts. Inbound SSH access to the bastion hosts is restricted to specific IP addresses, as defined in the associated security groups. The company's security team wants to receive a notification if the security group rules are modified to allow SSH access from any IP address.

What should a DevOps engineer do to meet this requirement?
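The condition the security team cares about can be expressed as a check over a security group's ingress rules. The sketch below assumes the rule layout returned by the EC2 DescribeSecurityGroups API (`IpPermissions`); the function name and sample rules are hypothetical, and the sketch does not cover how the check would be scheduled or how the notification would be sent.

```python
def allows_ssh_from_anywhere(ip_permissions):
    """Return True if any ingress rule opens TCP port 22 to 0.0.0.0/0 or ::/0."""
    for rule in ip_permissions:
        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            covers_ssh = True  # "-1" means all protocols and all ports
        elif protocol == "tcp":
            covers_ssh = rule.get("FromPort", 0) <= 22 <= rule.get("ToPort", 65535)
        else:
            covers_ssh = False
        if not covers_ssh:
            continue
        open_v4 = any(r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", []))
        open_v6 = any(r.get("CidrIpv6") == "::/0" for r in rule.get("Ipv6Ranges", []))
        if open_v4 or open_v6:
            return True
    return False


# Hypothetical rules: one restricted to a documentation range, one open to the world.
restricted = [{"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
               "IpRanges": [{"CidrIp": "203.0.113.0/24"}]}]
wide_open = [{"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
              "IpRanges": [{"CidrIp": "0.0.0.0/0"}]}]

print(allows_ssh_from_anywhere(restricted))  # False
print(allows_ssh_from_anywhere(wide_open))   # True
```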

An Amazon EC2 instance is running in a VPC and needs to download an object from a restricted Amazon S3 bucket. When the DevOps engineer tries to download the object, an AccessDenied error is received.

What are the possible causes for this error? (Select TWO.)

A company deploys its corporate infrastructure on AWS across multiple AWS Regions and Availability Zones. The infrastructure is deployed on Amazon EC2 instances and connects with AWS IoT Greengrass devices. The company deploys additional resources on on-premises servers that are located in the corporate headquarters.

The company wants to reduce the overhead involved in maintaining and updating its resources. The company's DevOps team plans to use AWS Systems Manager to implement automated management and application of patches. The DevOps team confirms that Systems Manager is available in the Regions that the resources are deployed in. Systems Manager also is available in a Region near the corporate headquarters.

Which combination of steps must the DevOps team take to implement automated patch and configuration management across the company's EC2 instances, IoT devices, and on-premises infrastructure? (Select THREE.)

A company builds a container image in an AWS CodeBuild project by running Docker commands. After the container image is built, the CodeBuild project uploads the container image to an Amazon S3 bucket. The CodeBuild project has an IAM service role that has permissions to access the S3 bucket.

A DevOps engineer needs to replace the S3 bucket with an Amazon Elastic Container Registry (Amazon ECR) repository to store the container images. The DevOps engineer creates an ECR private image repository in the same AWS Region of the CodeBuild project. The DevOps engineer adjusts the IAM service role with the permissions that are necessary to work with the new ECR repository. The DevOps engineer also places new repository information into the docker build command and the docker push command that are used in the buildspec.yml file.

When the CodeBuild project runs a build job, the job fails when the job tries to access the ECR repository.

Which solution will resolve the issue of failed access to the ECR repository?

A DevOps engineer has developed an AWS Lambda function. The Lambda function starts an AWS CloudFormation drift detection operation on all supported resources for a specific CloudFormation stack. The Lambda function then exits its invocation. The DevOps engineer has created an Amazon EventBridge scheduled rule that invokes the Lambda function every hour. An Amazon Simple Notification Service (Amazon SNS) topic already exists in the AWS account. The DevOps engineer has subscribed to the SNS topic to receive notifications.

The DevOps engineer needs to receive a notification as soon as possible when drift is detected in this specific stack configuration.

Which solution will meet these requirements?

A company runs an application on an Amazon Elastic Container Service (Amazon ECS) service by using the AWS Fargate launch type. The application consumes messages from an Amazon Simple Queue Service (Amazon SQS) queue. The application can take several minutes to process each message from the queue. When the application processes a message, the application reads a file from an Amazon S3 bucket and processes the data in the file. The application writes the processed output to a second S3 bucket. The company uses Amazon CloudWatch Logs to monitor processing errors and to ensure that the application processes messages successfully.

The SQS queue typically receives a low volume of messages. However, occasionally the queue receives higher volumes of messages. A DevOps engineer needs to implement a solution to reduce the processing time of message bursts.

Which solution will meet this requirement in the MOST cost-effective way?
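One way to reason about bursts like the one described is in terms of backlog per task: the number of tasks grows with the queue depth and shrinks back when the queue drains. The sketch below is a simplified model of that idea, not a scaling policy definition; the function name, the per-task capacity, and the task limits are all assumed values.

```python
import math


def desired_task_count(visible_messages, messages_per_task, min_tasks=1, max_tasks=20):
    """Size the service so each task owns at most `messages_per_task` queued messages."""
    needed = math.ceil(visible_messages / messages_per_task)
    return max(min_tasks, min(max_tasks, needed))


print(desired_task_count(3, 10))    # 1
print(desired_task_count(120, 10))  # 12
print(desired_task_count(900, 10))  # 20 (capped at max_tasks)
```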

A company has multiple member accounts that are part of an organization in AWS Organizations. The security team needs to review every Amazon EC2 security group and their inbound and outbound rules. The security team wants to programmatically retrieve this information from the member accounts using an AWS Lambda function in the management account of the organization.

Which combination of access changes will meet these requirements? (Choose three.)

A company uses AWS Organizations to manage multiple AWS accounts. The company needs a solution to improve the company's management of AWS resources in a production account.

The company wants to use AWS CloudFormation to manage all manually created infrastructure. The company must have the ability to strictly control who can make manual changes to AWS infrastructure. The solution must ensure that users can deploy new infrastructure only by making changes to a CloudFormation template that is stored in an AWS CodeConnections compatible Git provider.

Which combination of steps will meet these requirements with the LEAST implementation effort? (Select THREE.)

A company uses an Amazon Elastic Kubernetes Service (Amazon EKS) cluster to deploy its web applications on containers. The web applications contain confidential data that cannot be decrypted without specific credentials.

A DevOps engineer has stored the credentials in AWS Secrets Manager. The secrets are encrypted by an AWS Key Management Service (AWS KMS) customer managed key. A Kubernetes service account for a third-party tool makes the secrets available to the applications. The service account assumes an IAM role that the company created to access the secrets.

The service account receives an Access Denied (403 Forbidden) error while trying to retrieve the secrets from Secrets Manager.

What is the root cause of this issue?

A company has a mobile application that makes HTTP API calls to an Application Load Balancer (ALB). The ALB routes requests to an AWS Lambda function. Many different versions of the application are in use at any given time, including versions that are in testing by a subset of users. The version of the application is defined in the user-agent header that is sent with all requests to the API.

After a series of recent changes to the API, the company has observed issues with the application. The company needs to gather a metric for each API operation by response code for each version of the application that is in use. A DevOps engineer has modified the Lambda function to extract the API operation name, the version information from the user-agent header, and the response code.

Which additional set of actions should the DevOps engineer take to gather the required metrics?
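To make "a metric for each API operation by response code for each version" concrete, the sketch below builds a log line in the CloudWatch embedded metric format with those three values as dimensions. This is one possible encoding, not necessarily the approach the question expects; the namespace, the metric name, the dimension names, and the sample values are hypothetical.

```python
import json
import time


def emf_record(operation, app_version, status_code, namespace="MobileApi"):
    """Build one embedded metric format log line counting a single request."""
    return json.dumps({
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # milliseconds since epoch
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                "Dimensions": [["Operation", "AppVersion", "StatusCode"]],
                "Metrics": [{"Name": "RequestCount", "Unit": "Count"}],
            }],
        },
        "Operation": operation,
        "AppVersion": app_version,
        "StatusCode": str(status_code),
        "RequestCount": 1,
    })


# Printing the line to stdout in a Lambda function sends it to CloudWatch Logs.
print(emf_record("GetOrder", "4.2.1", 200))
```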

A media company has several thousand Amazon EC2 instances in an AWS account. The company is using Slack and a shared email inbox for team communications and important updates. A DevOps engineer needs to send all AWS-scheduled EC2 maintenance notifications to the Slack channel and the shared inbox. The solution must include the instances' Name and Owner tags.

Which solution will meet these requirements?

A company uses an Amazon Aurora PostgreSQL global database that has two secondary AWS Regions. A DevOps engineer has configured the database parameter group to guarantee an RPO of 60 seconds. Write operations on the primary cluster are occasionally blocked because of the RPO setting.

The DevOps engineer needs to reduce the frequency of blocked write operations.

Which solution will meet these requirements?

A company uses AWS CloudFormation to deploy application environments. A deployment failed due to manual modifications in stack resources. The DevOps engineer wants to detect manual modifications and alert the DevOps lead with the least effort.

Which solution meets these requirements?

A company wants to ensure that their EC2 instances are secure. They want to be notified if any new vulnerabilities are discovered on their instances and they also want an audit trail of all login activities on the instances.

Which solution will meet these requirements?

A company is building a serverless application that uses AWS Lambda functions to process data.

A BeginResponse Lambda function initializes data in response to specific application events. The company needs to ensure that a large number of Lambda functions are invoked after the BeginResponse Lambda function runs. Each Lambda function must be invoked in parallel and depends on only the outputs of the BeginResponse Lambda function. Each Lambda function has retry logic for invocation and must be able to fine-tune concurrency without losing data.

Which solution will meet these requirements with the MOST operational efficiency?

A DevOps engineer wants to find a solution to migrate an application from on premises to AWS. The application is running on Linux and needs to run on specific versions of Apache Tomcat, HAProxy, and Varnish Cache to function properly. The application's operating system-level parameters require tuning. The solution must include a way to automate the deployment of new application versions. The infrastructure should be scalable, and faulty servers should be replaced automatically.

Which solution should the DevOps engineer use?

A company that runs many workloads on AWS has an Amazon EBS spend that has increased over time. The DevOps team notices there are many unattached EBS volumes. Although there are workloads where volumes are detached, volumes over 14 days old are stale and no longer needed. A DevOps engineer has been tasked with creating automation that deletes unattached EBS volumes that have been unattached for 14 days.

Which solution will accomplish this?
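The selection rule in this scenario (unattached, and unattached for at least 14 days) can be sketched as a small filter. The record layout below is an assumption: `State` mirrors the EBS volume state, where `available` means unattached, but `DetachedAt` is a hypothetical field that the automation would have to record itself, for example in a tag.

```python
from datetime import datetime, timedelta, timezone


def stale_volume_ids(volumes, now, max_age=timedelta(days=14)):
    """Return IDs of volumes that have been unattached for at least `max_age`."""
    return [
        v["VolumeId"]
        for v in volumes
        if v["State"] == "available" and now - v["DetachedAt"] >= max_age
    ]


now = datetime(2026, 3, 1, tzinfo=timezone.utc)
# Hypothetical inventory records.
volumes = [
    {"VolumeId": "vol-example1", "State": "available",
     "DetachedAt": datetime(2026, 2, 1, tzinfo=timezone.utc)},
    {"VolumeId": "vol-example2", "State": "available",
     "DetachedAt": datetime(2026, 2, 25, tzinfo=timezone.utc)},
    {"VolumeId": "vol-example3", "State": "in-use",
     "DetachedAt": datetime(2026, 1, 1, tzinfo=timezone.utc)},
]

print(stale_volume_ids(volumes, now))  # ['vol-example1']
```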

A DevOps engineer is building the infrastructure for an application. The application needs to run on an Amazon Elastic Kubernetes Service (Amazon EKS) cluster that includes Amazon EC2 instances. The EC2 instances need to use an Amazon Elastic File System (Amazon EFS) file system as a storage backend. The Amazon EFS Container Storage Interface (CSI) driver is installed on the EKS cluster.

When the DevOps engineer starts the application, the EC2 instances do not mount the EFS file system.

Which solutions will fix the problem? (Select THREE.)

A company runs an application on an Amazon Elastic Kubernetes Service (Amazon EKS) cluster in the company's primary AWS Region and secondary Region. The company uses Auto Scaling groups to distribute each EKS cluster’s worker nodes across multiple Availability Zones. Both EKS clusters also have an Application Load Balancer (ALB) to distribute incoming traffic.

The company wants to deploy a new stateless application to its infrastructure. The company requires a multi-Region, fault tolerant solution.

Which solution will meet these requirements?

A company is storing 100 GB of log data in CSV format in an Amazon S3 bucket. SQL developers want to query this data and generate graphs to visualize it. The SQL developers also need an efficient, automated way to store metadata from the CSV file.

Which combination of steps will meet these requirements with the LEAST amount of effort? (Select THREE.)

A company is hosting a static website from an Amazon S3 bucket. The website is available to customers at example.com. The company uses an Amazon Route 53 weighted routing policy with a TTL of 1 day. The company has decided to replace the existing static website with a dynamic web application. The dynamic web application uses an Application Load Balancer (ALB) in front of a fleet of Amazon EC2 instances.

On the day of production launch to customers, the company creates an additional Route 53 weighted DNS record entry that points to the ALB with a weight of 255 and a TTL of 1 hour. Two days later, a DevOps engineer notices that the previous static website is displayed sometimes when customers navigate to example.com.

How can the DevOps engineer ensure that the company serves only dynamic content for example.com?
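With weighted routing, each record is returned with probability equal to its weight divided by the sum of all weights in the group, so a record with any nonzero weight still receives some traffic. The sketch below works through that arithmetic; the ALB weight of 255 comes from the scenario, while the static site's weight and the record names are assumed, because the scenario does not state them.

```python
def traffic_share(weights):
    """Map each record name to the fraction of DNS responses it receives."""
    total = sum(weights.values())
    if total == 0:
        # When every record has weight 0, Route 53 treats them as equally likely.
        return {name: 1 / len(weights) for name in weights}
    return {name: weight / total for name, weight in weights.items()}


# Assumed weight of 10 for the static site; 255 for the ALB as in the scenario.
print(traffic_share({"static-s3": 10, "alb": 255}))
print(traffic_share({"static-s3": 0, "alb": 255}))  # {'static-s3': 0.0, 'alb': 1.0}
```

Resolvers can also keep serving a cached answer until its TTL expires, so a change in weights is not visible to every client immediately.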

A company has developed an AWS Lambda function that handles orders received through an API. The company is using AWS CodeDeploy to deploy the Lambda function as the final stage of a CI/CD pipeline.

A DevOps engineer has noticed there are intermittent failures of the ordering API for a few seconds after deployment. After some investigation, the DevOps engineer believes the failures are due to database changes not having fully propagated before the Lambda function is invoked.

How should the DevOps engineer overcome this?

A company deploys a web application on Amazon EC2 instances that are behind an Application Load Balancer (ALB). The company stores the application code in an AWS CodeConnections compatible Git repository.

When the company merges code to the main branch, an AWS CodeBuild project is initiated. The CodeBuild project compiles the code, stores the packaged code in AWS CodeArtifact, and invokes AWS Systems Manager Run Command to deploy the packaged code to the EC2 instances.

Previous deployments have resulted in defects, EC2 instances that were not running the latest version of the packaged code, and inconsistencies between instances. A DevOps engineer needs to improve the reliability of the deployment solution.

Which combination of actions will meet this requirement? (Select TWO.)

An ecommerce company is receiving reports that its order history page is experiencing delays in reflecting the processing status of orders. The order processing system consists of an AWS Lambda function that uses reserved concurrency. The Lambda function processes order messages from an Amazon Simple Queue Service (Amazon SQS) queue and inserts processed orders into an Amazon DynamoDB table. The DynamoDB table has auto scaling enabled for read and write capacity.

Which actions should a DevOps engineer take to resolve this delay? (Choose two.)

A company has configured Amazon RDS storage autoscaling for its RDS DB instances. A DevOps team needs to visualize the autoscaling events on an Amazon CloudWatch dashboard.

Which solution will meet this requirement?

A company detects unusual login attempts in many of its AWS accounts. A DevOps engineer must implement a solution that sends a notification to the company's security team when multiple failed login attempts occur. The DevOps engineer has already created an Amazon Simple Notification Service (Amazon SNS) topic and has subscribed the security team to the SNS topic.

Which solution will provide the notification with the LEAST operational effort?

A company manages multiple AWS accounts in AWS Organizations. The company's security policy states that AWS account root user credentials for member accounts must not be used. The company monitors access to the root user credentials.

A recent alert shows that the root user in a member account launched an Amazon EC2 instance. A DevOps engineer must create an SCP at the organization's root level that will prevent the root user in member accounts from making any AWS service API calls.

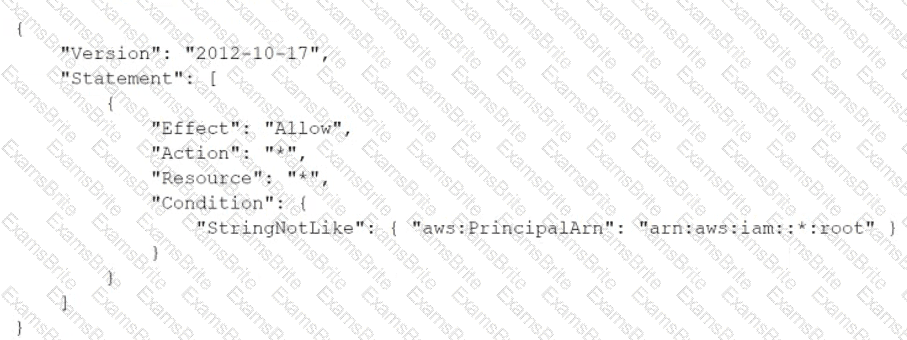

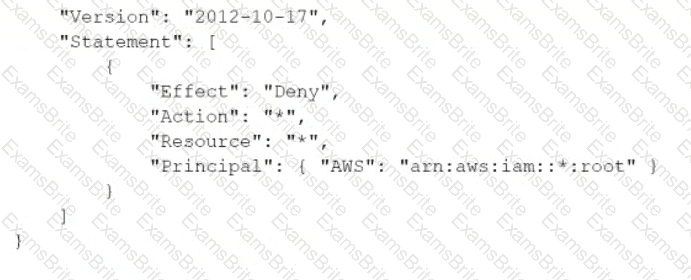

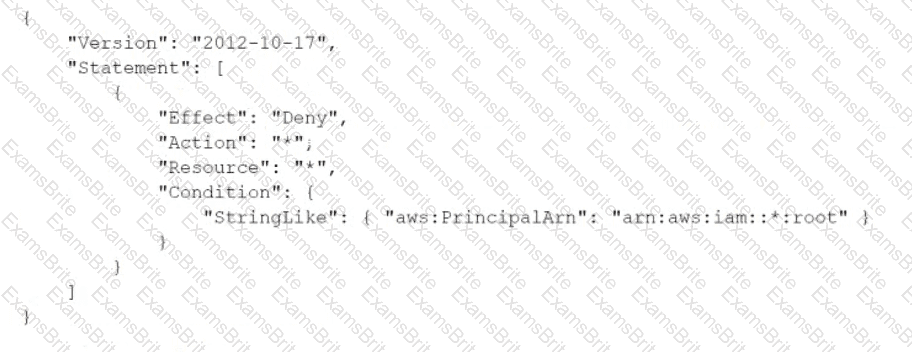

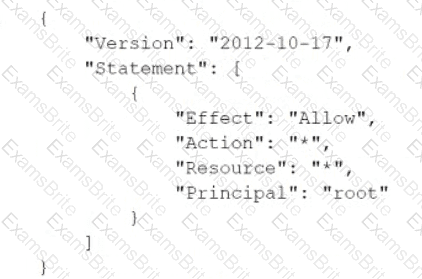

Which SCP will meet these requirements?

A)

B)

C)

D)

A company is developing code and wants to use semantic versioning. The company's DevOps team needs to create a pipeline for compiling the code. The team also needs to manage versions of the compiled code. If the code uses any open source libraries, the libraries must also be cached in the build process. Which solution will meet these requirements?
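For readers unfamiliar with the semantic versioning mentioned in the scenario, the following is a minimal sketch of how MAJOR.MINOR.PATCH version strings can be parsed, compared, and bumped. The names `Version`, `parse`, and `bump` are hypothetical helpers chosen for illustration; they are not part of any AWS service, and pre-release and build-metadata suffixes are deliberately left out.

```python
import re
from typing import NamedTuple

# MAJOR.MINOR.PATCH only; pre-release and build metadata are out of scope here.
_SEMVER = re.compile(r"^(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)$")


class Version(NamedTuple):
    """Hypothetical value type; tuple ordering gives numeric version ordering."""
    major: int
    minor: int
    patch: int

    def __str__(self) -> str:
        return f"{self.major}.{self.minor}.{self.patch}"


def parse(text: str) -> Version:
    """Parse a 'MAJOR.MINOR.PATCH' string, rejecting anything else."""
    match = _SEMVER.match(text)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return Version(*(int(part) for part in match.groups()))


def bump(version: Version, part: str) -> Version:
    """Return the next version; lower-order fields reset to zero."""
    if part == "major":
        return Version(version.major + 1, 0, 0)
    if part == "minor":
        return Version(version.major, version.minor + 1, 0)
    if part == "patch":
        return Version(version.major, version.minor, version.patch + 1)
    raise ValueError(f"unknown part: {part!r}")
```

Because the fields are compared numerically, `parse("1.10.0")` sorts after `parse("1.9.3")`, which a plain string comparison would get wrong.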

A company's security policies require the use of security-hardened AMIs in production environments. A DevOps engineer has used EC2 Image Builder to create a pipeline that builds the AMIs on a recurring schedule.

The DevOps engineer needs to update the launch templates of the company's Auto Scaling groups. The Auto Scaling groups must use the newest AMIs during the launch of Amazon EC2 instances.

Which solution will meet these requirements with the MOST operational efficiency?

A company uses a pipeline in AWS CodePipeline to deploy an application. The company created an AWS Fault Injection Service (AWS FIS) experiment template to test the resiliency of the application. A DevOps engineer needs to integrate the experiment into the pipeline.

Which solution will meet this requirement?

A company uses containers for its applications. The company learns that some container images are missing required security configurations.

A DevOps engineer needs to implement a solution to create a standard base image. The solution must publish the base image weekly to the us-west-2 Region, us-east-2 Region, and eu-central-1 Region.

Which solution will meet these requirements?

A development team manually builds an artifact locally and then places it in an Amazon S3 bucket. The application has a local cache that must be cleared when a deployment occurs. The team runs a command to do this, downloads the artifact from Amazon S3, and unzips the artifact to complete the deployment.

A DevOps team wants to migrate to a CI/CD process and build in checks to stop and roll back the deployment when a failure occurs. This requires the team to track the progression of the deployment.

Which combination of actions will accomplish this? (Select THREE.)

An ecommerce company uses a large number of Amazon Elastic Block Store (Amazon EBS) backed Amazon EC2 instances. To decrease manual work across all the instances, a DevOps engineer is tasked with automating restart actions when EC2 instance retirement events are scheduled.

How can this be accomplished?

A company manages a web application that runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The EC2 instances run in an Auto Scaling group across multiple Availability Zones. The application uses an Amazon RDS for MySQL DB instance to store the data. The company has configured Amazon Route 53 with an alias record that points to the ALB.

A new company guideline requires a geographically isolated disaster recovery (DR) site with an RTO of 4 hours and an RPO of 15 minutes.

Which DR strategy will meet these requirements with the LEAST change to the application stack?
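As a reminder of what the two targets in the scenario mean, the sketch below checks a candidate recovery approach against them: the RPO bounds how much recent data may be lost, and the RTO bounds how long restoration may take. The function name `meets_objectives` and the sample figures in the usage note are hypothetical and do not describe any particular AWS feature.

```python
from datetime import timedelta

# Targets taken from the scenario.
RTO = timedelta(hours=4)     # maximum acceptable time to restore service
RPO = timedelta(minutes=15)  # maximum acceptable window of lost data


def meets_objectives(worst_case_data_loss: timedelta,
                     worst_case_recovery_time: timedelta) -> bool:
    """Return True only if both worst-case figures fit within the targets."""
    return worst_case_data_loss <= RPO and worst_case_recovery_time <= RTO
```

For example, an approach whose only copy of the data is a once-a-day backup has a worst-case data loss of about 24 hours, so `meets_objectives(timedelta(hours=24), timedelta(hours=2))` is `False` even though the recovery time is within the RTO.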

A company has proprietary data available by using an Amazon CloudFront distribution. The company needs to ensure that the distribution is accessible by only users from the corporate office that have a known set of IP address ranges. An AWS WAF web ACL is associated with the distribution and has a default action set to Count.

Which solution will meet these requirements with the LEAST operational overhead?
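The access requirement in this scenario amounts to an allowlist over known IP address ranges with everything else refused. The following sketch shows that logic in plain Python using the standard `ipaddress` module; it is not AWS WAF configuration. The `CORPORATE_RANGES` values are placeholder documentation ranges (RFC 5737), and `is_allowed` is a hypothetical helper name.

```python
import ipaddress

# Hypothetical corporate ranges; these are reserved documentation blocks,
# not real office addresses.
CORPORATE_RANGES = [
    ipaddress.ip_network("192.0.2.0/24"),
    ipaddress.ip_network("198.51.100.0/25"),
]


def is_allowed(client_ip: str) -> bool:
    """Allow a request only if its source address is in a known range."""
    address = ipaddress.ip_address(client_ip)
    return any(address in network for network in CORPORATE_RANGES)
```

Any address that matches no range falls through to a refusal, which is the behavior the requirement describes: known ranges are permitted and all other traffic is denied.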

A company has an AWS account named PipelineAccount. The account manages a pipeline in AWS CodePipeline. The account uses an IAM role named CodePipeline_Service_Role and produces an artifact that is stored in an Amazon S3 bucket. The company uses a customer managed AWS KMS key to encrypt objects in the S3 bucket.

A DevOps engineer wants to configure the pipeline to use an AWS CodeDeploy application in an AWS account named CodeDeployAccount to deploy the produced artifact.

The DevOps engineer updates the KMS key policy to grant the CodeDeployAccount account permission to use the key. The DevOps engineer configures an IAM role named DevOps_Role in the CodeDeployAccount account that has access to the CodeDeploy resources that the pipeline requires. The DevOps engineer updates an Amazon EC2 instance role that operates within the CodeDeployAccount account to allow access to the S3 bucket and the KMS key that is in the PipelineAccount account.

Which additional steps will meet these requirements?

A company has many AWS accounts. During AWS account creation, the company uses automation to create an Amazon CloudWatch Logs log group in every AWS Region that the company operates in. The automation configures new resources in the accounts to publish logs to the provisioned log groups in their Region.

The company has created a logging account to centralize the logging from all the other accounts. A DevOps engineer needs to aggregate the log groups from all the accounts to an existing Amazon S3 bucket in the logging account.

Which solution will meet these requirements in the MOST operationally efficient manner?

A company deploys an application to Amazon EC2 instances. The application runs Amazon Linux 2 and uses AWS CodeDeploy. The application has the following file structure for its code repository:

appspec.yml
config/config.txt
application/web

The appspec.yml file has the following contents in the files section:

files:
  - source: config/config.txt
    destination: /usr/local/src/config.txt
  - source: /
    destination: /var/www/html

What will the result be for the deployment of the config.txt file?