Confluent Certified Developer for Apache Kafka Certification Examination

Last Update Feb 28, 2026

Total Questions: 90

You create a topic with five partitions.

What can you assume about messages read from that topic by a single consumer group?

You want to read messages from all partitions of a topic in every consumer instance of your application.

How do you do this?

You are sending messages to a Kafka cluster in JSON format and want to add more information related to each message:

• Format of the message payload

• Message creation time

• A globally unique identifier that allows the message to be traced through the system

Where should this additional information be set?

You have a consumer group with default configuration settings reading messages from your Kafka cluster.

You need to optimize throughput so the consumer group processes more messages in the same amount of time.

Which change should you make?

You need to correctly join data from two Kafka topics.

Which two scenarios will allow for co-partitioning?

(Select two.)
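
As a study aid, the conditions usually cited for co-partitioning in a Kafka Streams join can be written as a small check: both topics have the same number of partitions, and both are produced with the same key and the same partitioning strategy. The following is a minimal Python sketch under those assumptions. The `TopicInfo` class, its fields, and the sample topic names are hypothetical and are not part of any Kafka client API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TopicInfo:
    """Hypothetical description of a topic; not a Kafka client class."""
    name: str
    partitions: int
    key_field: str     # field that producers use as the record key
    partitioner: str   # name of the partitioning strategy used by producers


def is_co_partitioned(left: TopicInfo, right: TopicInfo) -> bool:
    """Return True when equal keys would land in the same partition number."""
    return (
        left.partitions == right.partitions
        and left.key_field == right.key_field
        and left.partitioner == right.partitioner
    )


# Sample topics, made up for illustration.
orders = TopicInfo("orders", 6, "customer_id", "default")
customers = TopicInfo("customers", 6, "customer_id", "default")
payments = TopicInfo("payments", 3, "customer_id", "default")

print(is_co_partitioned(orders, customers))  # True
print(is_co_partitioned(orders, payments))   # False
```
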

You are composing a REST request to create a new connector in a running Connect cluster. You invoke POST /connectors with a configuration and receive a 409 (Conflict) response.

What are two reasons for this response? (Select two.)

You want to enrich the content of a topic by joining it with key records from a second topic.

The two topics have a different number of partitions.

Which two solutions can you use?

(Select two.)

You are writing a producer application and need to ensure proper delivery. You configure the producer with acks=all.

Which two actions should you take to ensure proper error handling?

(Select two.)

You are designing a stream pipeline to monitor the real-time location of GPS trackers, where historical location data is not required.

Each event has:

• Key: trackerId

• Value: latitude, longitude

You need to ensure that the latest location for each tracker is always retained in the Kafka topic.

Which topic configuration parameter should you set?
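
For background, a compacted topic (`cleanup.policy=compact`) keeps at least the most recent value for each key. The sketch below is a simplified in-memory model of that behavior in Python, not broker code. The `compact` function and the sample tracker IDs and coordinates are made up for illustration.

```python
def compact(log):
    """Keep only the latest record per key, preserving offset order of survivors.

    `log` is a list of (key, value) tuples in offset order.
    """
    latest_offset = {}
    for offset, (key, _value) in enumerate(log):
        latest_offset[key] = offset  # later offsets overwrite earlier ones
    return [
        record
        for offset, record in enumerate(log)
        if latest_offset[record[0]] == offset
    ]


# Sample events: key is the tracker ID, value is (latitude, longitude).
log = [
    ("tracker-1", (52.52, 13.40)),
    ("tracker-2", (48.85, 2.35)),
    ("tracker-1", (52.53, 13.41)),
]
compacted = compact(log)
print(compacted)
```

In this model the older `tracker-1` location is dropped, while the latest location of every tracker survives.
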

Your company has three Kafka clusters: Development, Testing, and Production.

The Production cluster is running out of storage, so you add a new node.

Which two statements about the new node are true?

(Select two.)

You have a Kafka consumer in production actively reading from a critical topic.

You need to update the offset of your consumer to start reading from the beginning of the topic.

Which action should you take?

A stream processing application is consuming from a topic with five partitions. You run three instances of the application. Each instance has num.stream.threads=5.

You need to identify the number of stream tasks that will be created and how many will actively consume messages from the input topic.
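
The arithmetic behind this scenario can be sketched as follows, assuming a topology with a single sub-topology and no standby replicas. In that case Kafka Streams creates one task per input partition, and each task runs on exactly one stream thread. The function name and the returned dictionary keys are hypothetical.

```python
def stream_task_summary(input_partitions, instances, threads_per_instance):
    """Model task and thread counts for a single-sub-topology Streams app."""
    tasks = input_partitions                     # one task per input partition
    total_threads = instances * threads_per_instance
    active_threads = min(tasks, total_threads)   # each task runs on one thread
    idle_threads = total_threads - active_threads
    return {
        "tasks": tasks,
        "total_threads": total_threads,
        "active_threads": active_threads,
        "idle_threads": idle_threads,
    }


summary = stream_task_summary(
    input_partitions=5, instances=3, threads_per_instance=5
)
print(summary)
```

Under these assumptions, five partitions yield five tasks, so at most five of the fifteen threads have work to do and the rest sit idle.
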

Which configuration determines how many bytes of data are collected before sending messages to the Kafka broker?

You are developing a Java application that includes a Kafka consumer.

You need to integrate Kafka client logs with your own application logs.

Your application is using the Log4j2 logging framework.

Which Java library dependency must you include in your project?

You need to configure a sink connector to write failed records to a dead letter queue topic. Requirements:

• Topic name: DLQ-Topic

• Headers containing error context must be added to the messages

Which three configuration parameters are necessary?

(Select three.)
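
For reference, Kafka Connect exposes its dead letter queue behavior through the `errors.*` family of sink connector settings. The Python sketch below assembles a request body of the kind sent to `POST /connectors` with those settings applied to the requirements above. The connector name, connector class, and source topic are hypothetical placeholders.

```python
import json

# Error-handling settings for a sink connector with a dead letter queue.
dlq_settings = {
    "errors.tolerance": "all",
    "errors.deadletterqueue.topic.name": "DLQ-Topic",
    "errors.deadletterqueue.context.headers.enable": "true",
}

connector_request = {
    "name": "example-sink",  # hypothetical connector name
    "config": {
        # Hypothetical placeholder class; substitute a real sink connector.
        "connector.class": "com.example.ExampleSinkConnector",
        "topics": "orders",  # hypothetical source topic
        **dlq_settings,
    },
}

print(json.dumps(connector_request, indent=2))
```
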

Your application is consuming from a topic configured with a deserializer.

It needs to be resilient to badly formatted records ("poison pills"). You surround the poll() call with a try/catch for RecordDeserializationException.

You need to log the bad record, skip it, and continue processing.

Which action should you take in the catch block?

You are experiencing low throughput from a Java producer.

Metrics show low I/O thread ratio and low I/O thread wait ratio.

What is the most likely cause of the slow producer performance?

You are implementing a Kafka Streams application to process financial transactions.

Each transaction must be processed exactly once to ensure accuracy.

The application reads from an input topic, performs computations, and writes results to an output topic.

During testing, you notice duplicate entries in the output topic, which violates the exactly-once processing requirement.

You need to ensure exactly-once semantics (EOS) for this Kafka Streams application.

Which step should you take?

You are configuring a source connector that writes records to an Orders topic.

You need to send some of the records to a different topic.

Which Single Message Transform (SMT) is best suited for this requirement?

You are creating a Kafka Streams application to process retail data.

Match the input data streams with the appropriate Kafka Streams object.

An application is consuming messages from Kafka.

The application logs show that partitions are frequently being reassigned within the consumer group.

Which two factors may be contributing to this?

(Select two.)

You are building real-time streaming applications using Kafka Streams.

Your application has a custom transformation.

You need to define custom processors in Kafka Streams.

Which tool should you use?

Which two statements about Kafka Connect Single Message Transforms (SMTs) are correct?

(Select two.)

You create a producer that writes messages about bank account transactions from tens of thousands of different customers into a topic.

• Your consumers must process these messages with low latency and minimize consumer lag.

• Processing takes ~6x longer than producing.

• Transactions for each bank account must be processed in order.

Which strategy should you use?
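
Kafka guarantees ordering only within a partition, and records with the same key are routed to the same partition by the default partitioner. The Python sketch below models that routing to show why keying by account keeps each account's transactions in order while still spreading load across partitions. It uses CRC32 as a stand-in for Kafka's actual murmur2 hash, and the partition count, account IDs, and `partition_for` helper are hypothetical.

```python
import zlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition. CRC32 stands in for Kafka's murmur2 hash."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


NUM_PARTITIONS = 12  # hypothetical partition count
events = [
    ("acct-1", "deposit"),
    ("acct-2", "withdraw"),
    ("acct-1", "withdraw"),
]

# Group events by the partition their key maps to, preserving arrival order.
partitions = {}
for account_id, action in events:
    p = partition_for(account_id, NUM_PARTITIONS)
    partitions.setdefault(p, []).append((account_id, action))

for p, records in sorted(partitions.items()):
    print(p, records)
```

Because a key always maps to the same partition, both `acct-1` events end up in one partition in their original order, regardless of how many partitions and consumers are added.
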

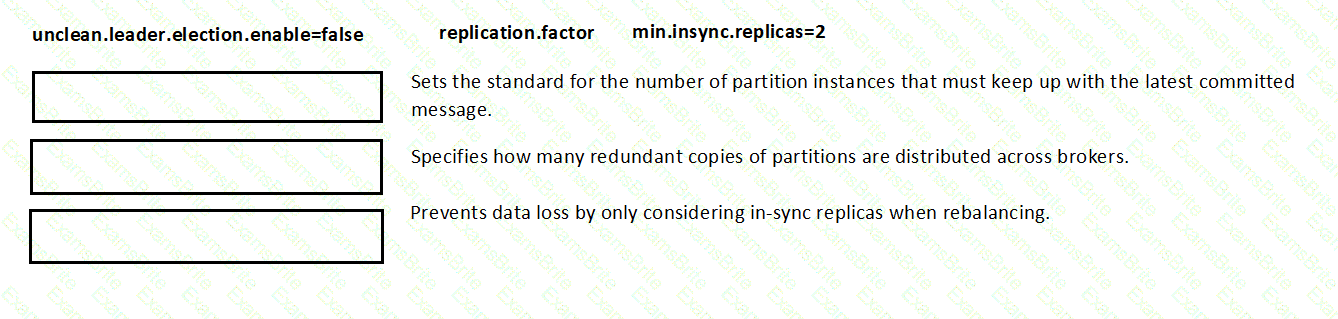

Match the topic configuration setting with the reason the setting affects topic durability.

(You are given settings like unclean.leader.election.enable=false, replication.factor, min.insync.replicas=2)
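
To illustrate how one of these settings interacts with the producer, the following Python sketch models the broker rule that a produce request with `acks=all` is rejected when the in-sync replica count falls below `min.insync.replicas`. It is a simplified model, not broker code, and the `write_accepted` function is a hypothetical name.

```python
def write_accepted(acks: str, in_sync_replicas: int, min_insync_replicas: int) -> bool:
    """Model whether the partition leader accepts a produce request.

    With acks=all, the write is rejected when the ISR is smaller than
    min.insync.replicas. For other acks values only a live leader is needed.
    """
    if acks == "all":
        return in_sync_replicas >= min_insync_replicas
    return in_sync_replicas >= 1


print(write_accepted("all", in_sync_replicas=3, min_insync_replicas=2))  # True
print(write_accepted("all", in_sync_replicas=1, min_insync_replicas=2))  # False
print(write_accepted("1", in_sync_replicas=1, min_insync_replicas=2))    # True
```
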