Google Cloud Certified - Professional Cloud DevOps Engineer Exam

Last Update Feb 28, 2026

Total Questions : 201

We are offering free Professional-Cloud-DevOps-Engineer Google exam questions. Just sign up, provide your details, and prepare with the free exam questions. You can then move on to the complete pool of Google Cloud Certified - Professional Cloud DevOps Engineer Exam test questions for further preparation.

You are designing a continuous delivery (CD) strategy for a new serverless application. The application is packaged as a container image, stored in Artifact Registry, and deployed to Cloud Run. Your design requires a staging environment, a fully-managed Google Cloud service, mandatory manual approval for production deployments, and a phased rollout to production. Your solution should minimize administrative overhead. What should you do?

You use a multi-step Cloud Build pipeline to build and deploy your application to Google Kubernetes Engine (GKE). You want to integrate with a third-party monitoring platform by performing an HTTP POST of the build information to a webhook. You want to minimize the development effort. What should you do?

You are responsible for creating and modifying the Terraform templates that define your infrastructure. Because two new engineers will also be working on the same code, you need to define a process and adopt a tool that will prevent you from overwriting each other's code. You also want to ensure that you capture all updates in the latest version. What should you do?

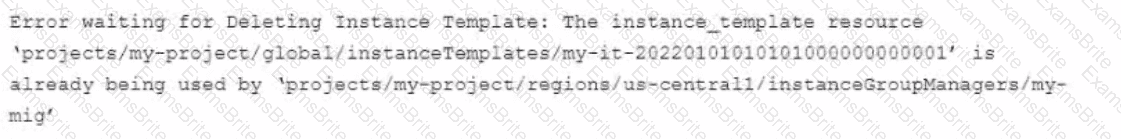

You use Terraform to manage an application deployed to a Google Cloud environment. The application runs on instances deployed by a managed instance group. The Terraform code is deployed by using a CI/CD pipeline. When you change the machine type on the instance template used by the managed instance group, the pipeline fails at the terraform apply stage with the following error message:

You need to update the instance template and minimize disruption to the application and the number of pipeline runs. What should you do?

You support a trading application written in Python and hosted on App Engine flexible environment. You want to customize the error information being sent to Stackdriver Error Reporting. What should you do?

You need to introduce postmortems into your organization. You want to ensure that the postmortem process is well received. What should you do?

Choose 2 answers

Your application runs on Google Cloud Platform (GCP). You need to implement Jenkins for deploying application releases to GCP. You want to streamline the release process, lower operational toil, and keep user data secure. What should you do?

Your team of Infrastructure DevOps Engineers is growing, and you are starting to use Terraform to manage infrastructure. You need a way to implement code versioning and to share code with other team members. What should you do?

Your company follows Site Reliability Engineering principles. You are writing a postmortem for an incident, triggered by a software change, that severely affected users. You want to prevent severe incidents from happening in the future. What should you do?

You are creating a CI/CD pipeline in Cloud Build to build an application container image. The application code is stored in GitHub. Your company requires that production image builds are only run against the main branch and that the change control team approves all pushes to the main branch. You want the image build to be as automated as possible. What should you do?

Choose 2 answers

You have deployed a fleet of Compute Engine instances in Google Cloud. You need to ensure that monitoring metrics and logs for the instances are visible in Cloud Logging and Cloud Monitoring by your company's operations and cybersecurity teams. You need to grant the required roles for the Compute Engine service account by using Identity and Access Management (IAM) while following the principle of least privilege. What should you do?

You are running an application on Compute Engine and collecting logs through Stackdriver. You discover that some personally identifiable information (PII) is leaking into certain log entry fields. All PII entries begin with the text userinfo. You want to capture these log entries in a secure location for later review and prevent them from leaking to Stackdriver Logging. What should you do?

Your team is running microservices in Google Kubernetes Engine (GKE). You want to detect consumption of an error budget to protect customers and define release policies. What should you do?

You are implementing a CI/CD pipeline for your application in your company's multi-cloud environment. Your application is deployed by using custom Compute Engine images and the equivalent in other cloud providers. You need to implement a solution that will enable you to build and deploy the images to your current environment and is adaptable to future changes. Which solution stack should you use?

You are writing a postmortem for an incident that severely affected users. You want to prevent similar incidents in the future. Which two of the following sections should you include in the postmortem? (Choose two.)

You are using Stackdriver to monitor applications hosted on Google Cloud Platform (GCP). You recently deployed a new application, but its logs are not appearing on the Stackdriver dashboard.

You need to troubleshoot the issue. What should you do?

Your company experiences bugs, outages, and slowness in its production systems. Developers use the production environment for new feature development and bug fixes. Configuration and experiments are done in the production environment, causing outages for users. Testers use the production environment for load testing, which often slows the production systems. You need to redesign the environment to reduce the number of bugs and outages in production and to enable testers to load test new features. What should you do?

You work for a global organization and run a service with an availability target of 99% with limited engineering resources. For the current calendar month, you noticed that the service has 99.5% availability. You must ensure that your service meets the defined availability goals and can react to business changes, including the upcoming launch of new features. You also need to reduce technical debt while minimizing operational costs. You want to follow Google-recommended practices. What should you do?
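The figures in this scenario can be related through the usual error-budget arithmetic. The following is a minimal sketch, assuming the error budget is defined as one minus the availability target and that availability is measured over the same window as the target; the function name is hypothetical.

```python
def error_budget_consumed(slo_target: float, observed_availability: float) -> float:
    """Return the fraction of the error budget that has been used.

    Assumes the error budget is (1 - slo_target) and that both values
    are fractions measured over the same time window.
    """
    budget = 1.0 - slo_target
    if budget <= 0:
        raise ValueError("SLO target must be below 100%")
    return (1.0 - observed_availability) / budget


# Values taken from the scenario: 99% target, 99.5% observed availability.
consumed = error_budget_consumed(0.99, 0.995)
remaining = 1.0 - consumed
print(f"consumed: {consumed:.0%}, remaining: {remaining:.0%}")
```

Under these assumptions roughly half of the month's error budget is still unspent.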

You are creating Cloud Logging sinks to export log entries from Cloud Logging to BigQuery for future analysis. Your organization has a Google Cloud folder named Dev that contains development projects and a folder named Prod that contains production projects. Log entries for development projects must be exported to dev_dataset, and log entries for production projects must be exported to prod_dataset. You need to minimize the number of log sinks created and you want to ensure that the log sinks apply to future projects. What should you do?

You support a production service that runs on a single Compute Engine instance. You regularly need to spend time on recreating the service by deleting the crashing instance and creating a new instance based on the relevant image. You want to reduce the time spent performing manual operations while following Site Reliability Engineering principles. What should you do?

You are performing a semiannual capacity planning exercise for your flagship service. You expect a service user growth rate of 10% month-over-month over the next six months. Your service is fully containerized and runs on Google Cloud Platform (GCP), using a Google Kubernetes Engine (GKE) Standard regional cluster on three zones with cluster autoscaler enabled. You currently consume about 30% of your total deployed CPU capacity, and you require resilience against the failure of a zone. You want to ensure that your users experience minimal negative impact as a result of this growth or as a result of zone failure, while avoiding unnecessary costs. How should you prepare to handle the predicted growth?
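The growth figures in this scenario can be worked through numerically. The sketch below is illustrative only and rests on several assumptions that the question does not state: CPU demand scales linearly with user count, capacity is spread evenly across the three zones, and deployed capacity stays fixed (that is, the cluster autoscaler is ignored). The function names are hypothetical.

```python
def projected_utilization(current: float, monthly_growth: float, months: int) -> float:
    """Compound the current utilization by a month-over-month growth rate."""
    return current * (1.0 + monthly_growth) ** months


def utilization_after_zone_loss(utilization: float, zones: int) -> float:
    """Utilization of the surviving zones if one evenly sized zone fails."""
    if zones < 2:
        raise ValueError("need at least two zones to survive a zone failure")
    return utilization * zones / (zones - 1)


# Values from the scenario: 30% utilization, 10% monthly growth, 6 months, 3 zones.
in_six_months = projected_utilization(0.30, 0.10, 6)
after_zone_failure = utilization_after_zone_loss(in_six_months, 3)
print(f"after six months: {in_six_months:.1%}")
print(f"after six months with one zone down: {after_zone_failure:.1%}")
```

Under these assumptions the currently deployed capacity would be a little over half used after six months, and close to 80% used if a zone were lost at that point.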

You support a Node.js application running on Google Kubernetes Engine (GKE) in production. The application makes several HTTP requests to dependent applications. You want to anticipate which dependent applications might cause performance issues. What should you do?

Your organization is running multiple Google Kubernetes Engine (GKE) clusters in a project. You need to design a highly-available solution to collect and query both domain-specific workload metrics and GKE default metrics across all clusters, while minimizing operational overhead. What should you do?

You deploy a new release of an internal application during a weekend maintenance window when there is minimal user traffic. After the window ends, you learn that one of the new features isn't working as expected in the production environment. After an extended outage, you roll back the new release and deploy a fix. You want to modify your release process to reduce the mean time to recovery so you can avoid extended outages in the future. What should you do?

Choose 2 answers

You are running a real-time gaming application on Compute Engine that has a production and testing environment. Each environment has its own Virtual Private Cloud (VPC) network. The application frontend and backend servers are located on different subnets in the environment's VPC. You suspect there is a malicious process communicating intermittently in your production frontend servers. You want to ensure that network traffic is captured for analysis. What should you do?

You are designing a new Google Cloud organization for a client. Your client is concerned with the risks associated with long-lived credentials created in Google Cloud. You need to design a solution to completely eliminate the risks associated with the use of JSON service account keys while minimizing operational overhead. What should you do?

You are ready to deploy a new feature of a web-based application to production. You want to use Google Kubernetes Engine (GKE) to perform a phased rollout to half of the web server pods.

What should you do?

You support a high-traffic web application and want to ensure that the home page loads in a timely manner. As a first step, you decide to implement a Service Level Indicator (SLI) to represent home page request latency with an acceptable page load time set to 100 ms. What is the Google-recommended way of calculating this SLI?
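SRE literature commonly expresses a request-latency SLI as the proportion of good events to valid events. The sketch below illustrates that ratio for the 100 ms threshold in this scenario. The sample latencies are made up, treating every request as valid is a simplification, and counting a request at exactly the threshold as good is an assumption.

```python
from typing import Sequence


def latency_sli(latencies_ms: Sequence[float], threshold_ms: float = 100.0) -> float:
    """Return the fraction of requests served within the latency threshold.

    Every request in `latencies_ms` is treated as a valid event; a request
    is "good" when its latency is at or below `threshold_ms`.
    """
    if not latencies_ms:
        raise ValueError("at least one request is required")
    good = sum(1 for latency in latencies_ms if latency <= threshold_ms)
    return good / len(latencies_ms)


# Hypothetical home page request latencies, in milliseconds.
sample = [42.0, 87.5, 99.0, 120.3, 250.0, 64.1, 98.4, 15.2]
sli = latency_sli(sample)
print(f"latency SLI: {sli:.1%}")
```

Six of the eight hypothetical requests complete within 100 ms, so the SLI for this sample is 75%.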

You are creating and assigning action items in a postmortem for an outage. The outage is over, but you need to address the root causes. You want to ensure that your team handles the action items quickly and efficiently. How should you assign owners and collaborators to action items?

You are designing a new multi-tenant Google Kubernetes Engine (GKE) cluster for a customer. Your customer is concerned with the risks associated with the use of long-lived credentials. The customer requires that each GKE workload has the minimum Identity and Access Management (IAM) permissions set following the principle of least privilege (PoLP). You need to design an IAM impersonation solution while following Google-recommended practices. What should you do?

Your application's performance in Google Cloud has degraded since the last release. You suspect that downstream dependencies might be causing some requests to take longer to complete. You need to investigate the issue with your application to determine the cause. What should you do?

Your company is developing applications that are deployed on Google Kubernetes Engine (GKE). Each team manages a different application. You need to create the development and production environments for each team, while minimizing costs. Different teams should not be able to access other teams’ environments. What should you do?

You support an application running on App Engine. The application is used globally and accessed from various device types. You want to know the number of connections. You are using Stackdriver Monitoring for App Engine. What metric should you use?

You are running an application in a virtual machine (VM) using a custom Debian image. The image has the Stackdriver Logging agent installed. The VM has the cloud-platform scope. The application is logging information via syslog. You want to use Stackdriver Logging in the Google Cloud Platform Console to visualize the logs. You notice that syslog is not showing up in the "All logs" dropdown list of the Logs Viewer. What is the first thing you should do?

You recently noticed that one of your services has exceeded the error budget for the current rolling window period. Your company's product team is about to launch a new feature. You want to follow Site Reliability Engineering (SRE) practices.

What should you do?

You support a multi-region web service running on Google Kubernetes Engine (GKE) behind a Global HTTP(S) Cloud Load Balancer (CLB). For legacy reasons, user requests first go through a third-party Content Delivery Network (CDN), which then routes traffic to the CLB. You have already implemented an availability Service Level Indicator (SLI) at the CLB level. However, you want to increase coverage in case of a potential load balancer misconfiguration, CDN failure, or other global networking catastrophe. Where should you measure this new SLI?

Choose 2 answers

Your team has an application built by using a Dockerfile. The build is executed from Cloud Build, and the resulting artifacts are stored in Artifact Registry. Your team is reporting that builds are slow. You need to increase build speed, while following Google-recommended practices. What should you do?

You support a user-facing web application. When analyzing the application's error budget over the previous six months, you notice that the application never consumed more than 5% of its error budget. You hold an SLO review with business stakeholders and confirm that the SLO is set appropriately. You want your application's reliability to more closely reflect its SLO. What steps can you take to further that goal while balancing velocity, reliability, and business needs?

Choose 2 answers

You need to enforce several constraint templates across your Google Kubernetes Engine (GKE) clusters. The constraints include policy parameters, such as restricting the Kubernetes API. You must ensure that the policy parameters are stored in a GitHub repository and automatically applied when changes occur. What should you do?

You are running a web application deployed to a Compute Engine managed instance group. Ops Agent is installed on all instances. You recently noticed suspicious activity from a specific IP address. You need to configure Cloud Monitoring to view the number of requests from that specific IP address with minimal operational overhead. What should you do?

You use Cloud Build to build your application. You want to reduce the build time while minimizing cost and development effort. What should you do?

You need to reduce the cost of virtual machines (VMs) for your organization. After reviewing different options, you decide to leverage preemptible VM instances. Which application is suitable for preemptible VMs?

You are deploying an application that needs to access sensitive information. You need to ensure that this information is encrypted and the risk of exposure is minimal if a breach occurs. What should you do?

Your company is migrating its production systems to Google Cloud. You need to implement site reliability engineering (SRE) practices during the migration to minimize customer impact from potential future incidents. Which two SRE practices should you implement?

Choose 2 answers

Your organization wants to collect system logs that will be used to generate dashboards in Cloud Operations for their Google Cloud project. You need to configure all current and future Compute Engine instances to collect the system logs and you must ensure that the Ops Agent remains up to date. What should you do?

You support a user-facing web application. When analyzing the application’s error budget over the previous six months, you notice that the application has never consumed more than 5% of its error budget in any given time window. You hold a Service Level Objective (SLO) review with business stakeholders and confirm that the SLO is set appropriately. You want your application’s SLO to more closely reflect its observed reliability. What steps can you take to further that goal while balancing velocity, reliability, and business needs? (Choose two.)

Your Cloud Run application writes unstructured logs as text strings to Cloud Logging. You want to convert the unstructured logs to JSON-based structured logs. What should you do?
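For context on what a JSON-based structured log line looks like, here is a minimal sketch. It relies on the documented Cloud Run behavior that a single-line JSON object written to standard output is parsed into a structured entry, with the `severity` and `message` fields treated specially. The helper name and the `order_id` field are hypothetical, and this illustrates the log format only, not any particular answer option.

```python
import json
import sys


def format_structured(message: str, severity: str = "INFO", **fields: str) -> str:
    """Serialize a log entry as one line of JSON.

    `severity` and `message` are field names Cloud Logging recognizes;
    any extra keyword arguments become additional payload fields.
    """
    entry = {"severity": severity, "message": message, **fields}
    return json.dumps(entry)


# Hypothetical log event with one custom field.
line = format_structured("order processed", severity="INFO", order_id="hypothetical-123")
print(line, file=sys.stdout)
```

Emitting one JSON object per line keeps each entry self-contained, so extra fields such as the hypothetical `order_id` can be filtered on later.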

You are managing an MLOps platform on a Google Kubernetes Engine (GKE) cluster that serves two teams:

The Research team runs hundreds of small, daily experiments that can run as capacity allows and are highly cost-sensitive.

The Production team must retrain the company's main language model every Friday at 10:00 PM. This job is business-critical, cannot be interrupted, and must start on time.

You need to configure the modes for Dynamic Workload Scheduler to meet each team's requirements. What should you do?

Your organization wants to implement Site Reliability Engineering (SRE) culture and principles. Recently, a service that you support had a limited outage. A manager on another team asks you to provide a formal explanation of what happened so they can action remediations. What should you do?

You use Artifact Registry to store container images built with Cloud Build. You need to ensure that all existing and new images are continuously scanned for vulnerabilities. You also want to track who pushed each image to the registry. What should you do?

You are the Site Reliability Engineer responsible for managing your company's data services and products. You regularly navigate operational challenges, such as unpredictable data volume and high cost, with your company's data ingestion processes. You recently learned that a new data ingestion product will be developed in Google Cloud. You need to collaborate with the product development team to provide operational input on the new product. What should you do?

Your company runs an ecommerce website built with JVM-based applications and microservice architecture in Google Kubernetes Engine (GKE). The application load increases during the day and decreases during the night. Your operations team has configured the application to run enough Pods to handle the evening peak load. You want to automate scaling by only running enough Pods and nodes for the load. What should you do?

Your team is designing a new application for deployment both inside and outside Google Cloud Platform (GCP). You need to collect detailed metrics such as system resource utilization. You want to use centralized GCP services while minimizing the amount of work required to set up this collection system. What should you do?

You are the Operations Lead for an ongoing incident with one of your services. The service usually runs at around 70% capacity. You notice that one node is returning 5xx errors for all requests. There has also been a noticeable increase in support cases from customers. You need to remove the offending node from the load balancer pool so that you can isolate and investigate the node. You want to follow Google-recommended practices to manage the incident and reduce the impact on users. What should you do?

You work for a healthcare company and regulations require you to create all resources in a United States-based region. You attempted to create a secret in Secret Manager but received the following error message:

The resource cannot be created in the global location due to an organization policy restriction.

You need to resolve the error while remaining compliant with regulations. What should you do?

Your company is using HTTPS requests to trigger a public Cloud Run-hosted service accessible at the https://booking-engine-abcdef.a.run.app URL. You need to give developers the ability to test the latest revisions of the service before the service is exposed to customers. What should you do?

Your company runs services by using Google Kubernetes Engine (GKE). The GKE clusters in the development environment run applications with verbose logging enabled. Developers view logs by using the kubectl logs command and do not use Cloud Logging. Applications do not have a uniform logging structure defined. You need to minimize the costs associated with application logging while still collecting GKE operational logs. What should you do?

You are configuring connectivity across Google Kubernetes Engine (GKE) clusters in different VPCs. You notice that the nodes in Cluster A are unable to access the nodes in Cluster B. You suspect that the workload access issue is due to the network configuration. You need to troubleshoot the issue but do not have execute access to workloads and nodes. You want to identify the layer at which the network connectivity is broken. What should you do?

Your company's security team needs to have read-only access to Data Access audit logs in the _Required bucket. You want to provide your security team with the necessary permissions following the principle of least privilege and Google-recommended practices. What should you do?

Your company has a Google Cloud resource hierarchy with folders for production, test, and development. Your cybersecurity team needs to review your company's Google Cloud security posture to accelerate security issue identification and resolution. You need to centralize the logs generated by Google Cloud services from all projects only inside your production folder to allow for alerting and near-real-time analysis. What should you do?