AWS Certified Developer - Associate

Last Update Feb 28, 2026

Total Questions : 519

We offer free DVA-C02 Amazon Web Services exam questions. Sign up, provide your details, and practice with the free DVA-C02 exam questions. Then move on to the complete pool of AWS Certified Developer - Associate test questions for further preparation.

A company is building an application to accept data from customers. The data must be encrypted at rest and in transit.

The application uses an Amazon API Gateway API that resolves to AWS Lambda functions. The Lambda functions store the data in an Amazon Aurora MySQL DB cluster. The application worked properly during testing.

A developer configured an Amazon CloudFront distribution with field-level encryption that uses an AWS Key Management Service (AWS KMS) key. After the configuration of the distribution, the application behaved unexpectedly. All the data in the database changed from plaintext to ciphertext.

The developer must ensure that the data is not stored in the database as the ciphertext from the CloudFront field-level encryption.

Which solution will meet this requirement?

A developer is building an application that uses an Amazon RDS for PostgreSQL database. To meet security requirements, the developer needs to ensure that data is encrypted at rest. The developer must be able to rotate the encryption keys on demand.

A developer is building a serverless application by using AWS Serverless Application Model (AWS SAM) on multiple AWS Lambda functions.

When the application is deployed, the developer wants to shift 10% of the traffic to the new deployment of the application for the first 10 minutes after deployment. If there are no issues, all traffic must switch over to the new version.

Which change to the AWS SAM template will meet these requirements?

A company developed an API application on AWS by using Amazon CloudFront, Amazon API Gateway, and AWS Lambda. The API has a minimum of four requests every second. A developer notices that many API users run the same query by using the POST method. The developer wants to cache the POST request to optimize the API resources.

Which solution will meet these requirements?

A company has an application that consists of different microservices that run inside an AWS account. The microservices are running in containers inside a single VPC. The number of microservices is constantly increasing. A developer must create a central logging solution for application logs.

A bookstore has an ecommerce website that stores order information in an Amazon DynamoDB table named BookOrders. The DynamoDB table contains approximately one million records.

The table uses OrderID as a partition key. There are no other indexes.

A developer wants to build a new reporting feature to retrieve all records from the table for a specified customer, based on a CustomerID property.

A company wants to share information with a third party. The third party has an HTTP API endpoint that the company can use to share the information. The company has the required API key to access the HTTP API.

The company needs a way to manage the API key by using code. The integration of the API key with the application code cannot affect application performance.

Which solution will meet these requirements MOST securely?

A developer used the AWS SDK to create an application that aggregates and produces log records for 10 services. The application delivers data to an Amazon Kinesis Data Streams stream.

Each record contains a log message with a service name, creation timestamp, and other log information. The stream has 15 shards in provisioned capacity mode. The stream uses service name as the partition key.

The developer notices that when all the services are producing logs, ProvisionedThroughputExceededException errors occur during PutRecord requests. The stream metrics show that the write capacity the applications use is below the provisioned capacity.
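To see how throttling can occur even though aggregate usage is below the provisioned capacity, it may help to look at how partition keys map to shards. Kinesis Data Streams hashes each partition key with MD5 into a 128-bit integer and routes the record to the shard whose hash key range contains that value. The sketch below assumes that the 15 shards divide the hash space into equal ranges and uses hypothetical service names (the scenario only says there are 10 services). With only 10 distinct keys, at most 10 of the 15 shards can ever receive data.

```python
import hashlib

SHARD_COUNT = 15        # from the scenario
HASH_SPACE = 2 ** 128   # MD5 yields a 128-bit hash key


def shard_for(partition_key: str, shard_count: int = SHARD_COUNT) -> int:
    """Return the index of the shard whose hash range contains the key.

    Assumption: the shards split the hash key space into equal ranges.
    """
    digest = hashlib.md5(partition_key.encode("utf-8")).hexdigest()
    hash_key = int(digest, 16)
    return hash_key * shard_count // HASH_SPACE


# Hypothetical service names used as partition keys.
services = [f"service-{i}" for i in range(10)]
used_shards = {shard_for(name) for name in services}
```

Because every record from one service lands on the same shard, a single busy service can exceed that shard's write limit while the stream as a whole stays under capacity.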

A company is expanding the compatibility of its photo-sharing mobile app to hundreds of additional devices with unique screen dimensions and resolutions. Photos are stored in Amazon S3 in their original format and resolution. The company uses an Amazon CloudFront distribution to serve the photos. The app includes the dimension and resolution of the display as GET parameters with every request.

A developer needs to implement a solution that optimizes the photos that are served to each device to reduce load time and increase photo quality.

Which solution will meet these requirements MOST cost-effectively?

A developer must use multi-factor authentication (MFA) to access data in an Amazon S3 bucket that is in another AWS account. Which AWS Security Token Service (AWS STS) API operation should the developer use with the MFA information to meet this requirement?

A developer is deploying an application on Amazon EC2 instances that run in Account A. In certain cases, this application needs to read data from a private Amazon S3 bucket in Account B. The developer must provide the application access to the S3 bucket without exposing the S3 bucket to anyone else.

Which combination of actions should the developer take to meet these requirements? (Select TWO.)

A developer creates an AWS Lambda function that is written in Java. During testing, the Lambda function does not work how the developer expected. The developer wants to use tracing capabilities to troubleshoot the problem.

Which AWS service should the developer use to accomplish this goal?

A developer is migrating an application to Amazon Elastic Kubernetes Service (Amazon EKS). The developer migrates the application to Amazon Elastic Container Registry (Amazon ECR) with an EKS cluster.

As part of the application migration to a new backend, the developer creates a new AWS account. The developer makes configuration changes to the application to point the application to the new AWS account and to use new backend resources. The developer successfully tests the changes within the application by deploying the pipeline.

The Docker image build and the pipeline deployment are successful, but the application is still connecting to the old backend. The developer finds that the application's configuration is still referencing the original EKS cluster and not referencing the new backend resources.

Which reason can explain why the application is not connecting to the new resources?

A developer is creating a new batch application that will run on an Amazon EC2 instance. The application requires read access to an Amazon S3 bucket. The developer needs to follow security best practices to grant S3 read access to the application.

Which solution meets these requirements?

A developer is creating a mobile application that will not require users to log in.

What is the MOST efficient method to grant users access to AWS resources?

A company has a web application that is hosted on Amazon EC2 instances. The EC2 instances are configured to stream logs to Amazon CloudWatch Logs. The company needs to receive an Amazon Simple Notification Service (Amazon SNS) notification when the number of application error messages exceeds a defined threshold within a 5-minute period.

Which solution will meet these requirements?

A developer is creating a template that uses AWS CloudFormation to deploy an application. The application is serverless and uses Amazon API Gateway, Amazon DynamoDB, and AWS Lambda.

Which AWS service or tool should the developer use to define serverless resources in YAML?

A developer is managing an application that uploads user files to an Amazon S3 bucket named companybucket. The company wants to maintain copies of all the files uploaded by users for compliance purposes, while ensuring users still have access to the data through the application.

Which IAM permissions should be applied to users to ensure they can create but not remove files from the bucket?

A company has an analytics application that uses an AWS Lambda function to process transaction data asynchronously. A developer notices that asynchronous invocations of the Lambda function sometimes fail. When failed Lambda function invocations occur, the developer wants to invoke a second Lambda function to handle errors and log details.

Which solution will meet these requirements?

A financial services company builds a credit card transaction processing application that uses an Amazon API Gateway HTTP API and AWS Lambda functions. The application logs all requests and request parameters to Amazon CloudWatch. The application makes the logs accessible to developer AWS accounts and a separate fraud detection AWS account by using a cross-account IAM role.

The company requires that only the fraud detection account be able to view customer credit card numbers that are associated with the transactions. Developers at the company must not be able to use the credit card numbers for testing or debugging.

The developers create the following data protection policy document snippet:

{
  "Name": "data-protection-policy",
  "Description": "Credit card redaction",
  "Version": "2021-06-01",
  "Statement": [{
    "Sid": "redact-policy",
    "DataIdentifier": [
      "arn:aws:dataprotection::aws:data-identifier/CreditCardNumber"
    ],
    "Operation": {
      "Deidentify": {
        "MaskConfig": {}
      }
    }
  }]
}

Which combination of actions must the developers take to comply with the new policy? (Select TWO.)

A company notices that credentials that the company uses to connect to an external software as a service (SaaS) vendor are stored in a configuration file as plaintext.

The developer needs to secure the API credentials and enforce automatic credentials rotation on a quarterly basis.

Which solution will meet these requirements MOST securely?

A company is building a microservices application that consists of many AWS Lambda functions. The development team wants to use AWS Serverless Application Model (AWS SAM) templates to automatically test the Lambda functions. The development team plans to test a small percentage of traffic that is directed to new updates before the team commits to a full deployment of the application.

Which combination of steps will meet these requirements in the MOST operationally efficient way? (Select TWO.)

An application ingests data from an Amazon Kinesis data stream. The shards in the data stream are set for normal traffic.

During tests for peak traffic, the application ingests data slowly. A developer needs to adjust the data stream to handle the peak traffic.

What should the developer do to meet this requirement MOST cost-effectively?

A developer is setting up a deployment pipeline. The pipeline includes an AWS CodeBuild build stage that requires access to a database to run integration tests. The developer is using a buildspec.yml file to configure the database connection. Company policy requires automatic rotation of all database credentials.

Which solution will handle the database credentials MOST securely?

A developer has created an AWS Lambda function that consumes messages from an Amazon SQS standard queue. The developer notices that the Lambda function processes some messages multiple times.

How should the developer resolve this issue MOST cost-effectively?

A developer is building an application that includes an AWS Lambda function that is written in .NET Core. The Lambda function's code needs to interact with Amazon DynamoDB tables and Amazon S3 buckets. The developer must minimize the Lambda function's deployment time and invocation duration.

Which solution will meet these requirements?

A company runs an application in a third-party cloud. The company wants to use the application to update data in AWS by using API calls to AWS services. The API calls require credentials.

The company's security policy requires the company to limit the scope and duration of any credentials used to make API calls to AWS services.

Which solution will meet these requirements in the MOST secure way?

A developer is creating an ecommerce workflow in an AWS Step Functions state machine that includes an HTTP Task state. The task passes shipping information and order details to an endpoint.

The developer needs to test the workflow to confirm that the HTTP headers and body are correct and that the responses meet expectations.

A developer deploys an AWS Lambda function that writes data to Amazon DynamoDB. Amazon CloudWatch metrics show that some Lambda invocations result in errors. However, no CloudWatch log entries exist for the function. The function source code includes logging statements before the write operation.

What should the developer do to resolve the missing CloudWatch Logs entries?

A developer has implemented an AWS Lambda function that inserts new customers into an Amazon RDS database. The function is expected to run hundreds of times each hour. The function and RDS database are in the same VPC. The function is configured to use 512 MB of RAM and is based on the following pseudocode:

def lambda_handler(event, context):
    db = database.connect()
    db.statement("INSERT INTO Customers (CustomerName) VALUES (%s)", event.name)
    db.execute()
    db.close()

After successfully testing the function multiple times, the developer notices that the execution time is longer than expected.

What should the developer do to improve performance?
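A pattern often discussed for handlers like this one is to open the database connection once per execution environment, outside the handler, so that warm invocations reuse it instead of reconnecting on every call. The sketch below is only an illustration under stated assumptions: `FakeDatabase` and `FakeConnection` are hypothetical in-memory stand-ins for the pseudocode's `database` module, and the event is treated as a dictionary with a `name` key.

```python
class FakeConnection:
    """Hypothetical stand-in for a real database connection."""

    def __init__(self):
        self.rows = []

    def execute(self, sql, params):
        # A real driver would send the statement to the database here.
        self.rows.append((sql, params))


class FakeDatabase:
    """Hypothetical stand-in for the pseudocode's `database` module."""

    connect_calls = 0

    @classmethod
    def connect(cls):
        cls.connect_calls += 1
        return FakeConnection()


# Created once when the execution environment initializes,
# then reused by every warm invocation of the handler.
db = FakeDatabase.connect()


def lambda_handler(event, context):
    db.execute(
        "INSERT INTO Customers (CustomerName) VALUES (%s)",
        (event["name"],),
    )
    return {"inserted": event["name"]}
```

Calling the handler several times in the same process leaves `FakeDatabase.connect_calls` at 1, which is the effect the pattern aims for.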

A company has built an AWS Lambda function to convert large image files into output files that can be used in a third-party viewer application. The company recently added a new module to the function to improve the output of the generated files. However, the new module has increased the bundle size and has increased the time that is needed to deploy changes to the function code.

How can a developer increase the speed of the Lambda function deployment?

A company has an AWS Step Functions state machine named myStateMachine. The company configured a service role for Step Functions. The developer must ensure that only the myStateMachine state machine can assume the service role.
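One way to scope a role's trust relationship to a single state machine is a condition on the `aws:SourceArn` global condition key. As a sketch, the helper below builds such a trust policy document as a Python dictionary. The Region and the account ID `111122223333` are placeholders, not values from the scenario.

```python
import json


def trust_policy_for(state_machine_arn: str) -> dict:
    """Build a trust policy that lets Step Functions assume the role
    only on behalf of the given state machine."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "states.amazonaws.com"},
                "Action": "sts:AssumeRole",
                "Condition": {
                    "ArnLike": {"aws:SourceArn": state_machine_arn}
                },
            }
        ],
    }


# Placeholder Region and account ID; only the name comes from the scenario.
arn = "arn:aws:states:us-east-1:111122223333:stateMachine:myStateMachine"
policy = trust_policy_for(arn)
policy_json = json.dumps(policy, indent=2)
```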

An application routinely processes a large number of Amazon S3 GET requests each second. A developer wants to increase the number of requests that the application can handle in parallel.

What should the developer do to achieve this goal?

A company is providing read access to objects in an Amazon S3 bucket for different customers. The company uses IAM permissions to restrict access to the S3 bucket. The customers can access only their own files.

Due to a regulation requirement, the company needs to enforce encryption in transit for interactions with Amazon S3.

Which solution will meet these requirements?
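For context, S3 bucket policies can evaluate the `aws:SecureTransport` condition key, which is `false` for requests that do not use HTTPS. The following is a minimal sketch of a policy document that denies such requests. The bucket name `example-customer-bucket` is hypothetical.

```python
import json


def deny_insecure_transport(bucket: str) -> dict:
    """Build a bucket policy that rejects any request not sent over TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # aws:SecureTransport is "false" for plain HTTP requests.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


bucket_policy = deny_insecure_transport("example-customer-bucket")
bucket_policy_json = json.dumps(bucket_policy, indent=2)
```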

A company's development team uses an SSH key pair to copy files among a large fleet of development servers. The SSH key pair has been compromised. A developer has generated a replacement key pair. The company has deployed the AWS Systems Manager Agent (SSM Agent) and the Amazon CloudWatch agent on all of the development servers.

The developer needs a solution to distribute the new key to all the Linux servers.

Which solution will meet these requirements in the MOST operationally efficient way?

A company is creating an application that processes csv files from Amazon S3. A developer has created an S3 bucket. The developer has also created an AWS Lambda function to process the csv files from the S3 bucket.

Which combination of steps will invoke the Lambda function when a csv file is uploaded to Amazon S3? (Select TWO.)

A developer is writing a serverless application that requires an AWS Lambda function to be invoked every 10 minutes.

What is an automated and serverless way to invoke the function?

A company stores its data in data tables in a series of Amazon S3 buckets. The company received an alert that customer credit card information might have been exposed in a data table on one of the company's public applications. A developer needs to identify all potential exposures within the application environment.

Which solution will meet these requirements?

A developer has created an AWS Lambda function that is written in Python. The Lambda function reads data from objects in Amazon S3 and writes data to an Amazon DynamoDB table.

The function is successfully invoked from an S3 event notification when an object is created. However, the function fails when it attempts to write to the DynamoDB table.

What is the MOST likely cause of this issue?

A company regularly receives route status updates from its delivery trucks as events in Amazon EventBridge. The company is building an API-based application in a VPC that will consume and process the events to create a delivery status dashboard. The API application must not be available by using public IP addresses because of security and compliance requirements.

How should the company send events from EventBridge to the API application?

A developer is building a serverless application by using the AWS Serverless Application Model (AWS SAM). The developer is currently testing the application in a development environment. When the application is nearly finished, the developer will need to set up additional testing and staging environments for a quality assurance team.

The developer wants to use a feature of AWS SAM to set up deployments to multiple environments.

Which solution will meet these requirements with the LEAST development effort?

A developer is building a microservice that uses AWS Lambda to process messages from an Amazon Simple Queue Service (Amazon SQS) standard queue. The Lambda function calls external APIs to enrich the SQS message data before loading the data into an Amazon Redshift data warehouse. The SQS queue must handle a maximum of 1,000 messages per second.

During initial testing, the Lambda function repeatedly inserted duplicate data into the Amazon Redshift table. The duplicate data led to a problem with data analysis. All duplicate messages were submitted to the queue within 1 minute of each other.

How should the developer resolve this issue?

A developer needs to write an AWS CloudFormation template on a local machine and deploy a CloudFormation stack to AWS.

What must the developer do to complete these tasks?

A company has implemented AWS CodeDeploy as part of its CI/CD pipeline. The company uses automatic rollbacks during an in-place deployment of a new version of a web application on Amazon EC2 instances.

What happens if the deployment of the new version fails validation?

A developer needs to retrieve all data from an Amazon DynamoDB table that matches a particular partition key.

Which solutions will meet this requirement in the MOST operationally efficient way? (Select TWO.)

A company's application runs on a fleet of Amazon EC2 instances in a VPC within private subnets that do not have public internet access. The company uses Amazon CloudWatch to monitor the application.

A developer is troubleshooting an issue with the application. Some performance metrics are not being published to CloudWatch. The developer uses EC2 Instance Connect to access an EC2 instance. The developer verifies that a CloudWatch agent is pre-installed and running.

The developer needs to ensure that the performance metrics are published to CloudWatch.

Which solution will meet this requirement in the MOST secure way?

A company wants to migrate applications from its on-premises servers to AWS. As a first step, the company is modifying and migrating a non-critical application to a single Amazon EC2 instance. The application will store information in an Amazon S3 bucket. The company needs to follow security best practices when deploying the application on AWS.

Which approach should the company take to allow the application to interact with Amazon S3?

An application writes transactions to an Amazon DynamoDB table by using the PutItem operation. Each transaction has a unique transactionId. Sometimes duplicate transactions are received. The developer wants to ensure that a duplicate PutItem does not overwrite an existing item. Duplicate transactions are rare.

What is the MOST cost-effective solution?
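DynamoDB supports conditional writes, which can reject a PutItem request when an item with the same key already exists. As a rough sketch, the helper below only builds the keyword arguments that could be passed to the low-level boto3 client's `put_item` call. The table name `Transactions` and the `amount` attribute are hypothetical, and `transactionId` is assumed to be the table's partition key.

```python
def build_put_request(table_name: str, transaction_id: str, amount: str) -> dict:
    """Build put_item arguments that fail if the transaction already exists."""
    return {
        "TableName": table_name,
        "Item": {
            "transactionId": {"S": transaction_id},
            "amount": {"N": amount},  # hypothetical attribute
        },
        # The write succeeds only when no item with this key is present.
        "ConditionExpression": "attribute_not_exists(transactionId)",
    }


request = build_put_request("Transactions", "txn-001", "42.50")
# With boto3 this could be sent as:
#   boto3.client("dynamodb").put_item(**request)
# A duplicate would then fail with ConditionalCheckFailedException
# instead of overwriting the existing item.
```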

A developer is creating an AWS CloudFormation template to deploy Amazon EC2 instances across multiple AWS accounts. The developer must choose the EC2 instances from a list of approved instance types.

How can the developer incorporate the list of approved instance types in the CloudFormation template?

A developer needs to store configuration variables for an application. The developer needs to set an expiration date and time for the configuration. The developer wants to receive notifications before the configuration expires. Which solution will meet these requirements with the LEAST operational overhead?

A company is planning to securely manage one-time fixed license keys in AWS. The company's development team needs to access the license keys in automation scripts that run in Amazon EC2 instances and in AWS CloudFormation stacks.

Which solution will meet these requirements MOST cost-effectively?

An application uses AWS X-Ray to generate a large amount of trace data on an hourly basis. A developer wants to use filter expressions to limit the returned results through user-specified custom attributes.

How should the developer use filter expressions to filter the results in X-Ray?

A developer has written a distributed application that uses microservices. The microservices are running on Amazon EC2 instances. Because of message volume, the developer is unable to match log output from each microservice to a specific transaction. The developer needs to analyze the message flow to debug the application.

Which combination of steps should the developer take to meet this requirement? (Select TWO.)

A company is building a web application on AWS. When a customer sends a request, the application will generate reports and then make the reports available to the customer within one hour. Reports should be accessible to the customer for 8 hours. Some reports are larger than 1 MB. Each report is unique to the customer. The application should delete all reports that are older than 2 days.

Which solution will meet these requirements with the LEAST operational overhead?

A company wants to ensure that only one user from its Admin group has the permanent right to delete an Amazon EC2 resource. The company must not modify the existing Admin group policy.

What should a developer use to meet these requirements?

A company has an application that processes audio files for different departments. When audio files are saved to an Amazon S3 bucket, an AWS Lambda function receives an event notification and processes the audio input.

A developer needs to update the solution so that the application can process the audio files for each department independently. The application must publish the audio file location for each department to each department's existing Amazon SQS queue.

Which solution will meet these requirements with no changes to the Lambda function code?

A company has a web application that contains an Amazon API Gateway REST API. A developer has created an AWS CloudFormation template for the initial deployment of the application. The developer has deployed the application successfully as part of an AWS CodePipeline CI/CD process. All resources and methods are available through the deployed stage endpoint.

The CloudFormation template contains the following resource types:

• AWS::ApiGateway::RestApi

• AWS::ApiGateway::Resource

• AWS::ApiGateway::Method

• AWS::ApiGateway::Stage

• AWS::ApiGateway::Deployment

The developer adds a new resource to the REST API with additional methods and redeploys the template. CloudFormation reports that the deployment is successful and that the stack is in the UPDATE_COMPLETE state. However, calls to all new methods are returning 404 (Not Found) errors.

What should the developer do to make the new methods available?

A developer is building a multi-tenant application that uses an AWS Lambda function and an Amazon S3 bucket. An S3 event notification invokes the Lambda function when a new file is uploaded to the S3 bucket. The function reads each new file from the S3 bucket, processes the file, and writes data to an Amazon DynamoDB table. Each file in the S3 bucket has a prefix that corresponds with the name of the tenant that owns the file. Items in the DynamoDB table use tenant name as the partition key.

The developer must reduce the risk that file data will leak across tenants during processing.

Which combination of actions will meet this requirement? (Select THREE.)

Users of a web-based music application are experiencing latency issues on one of the application's most popular pages. A developer identifies that the issue is caused by the slow load time of specific widgets that rank and sort various songs and albums.

The developer needs to ensure that the widgets load more quickly by using built-in, in-memory ranking and sorting techniques. The developer must ensure that the data remains up to date.

Which solution will meet these requirements with the LEAST latency?

A healthcare company is developing a multi-tier web application to manage patient records that are in an Amazon Aurora PostgreSQL database cluster. The company stores the application code in a Git repository and deploys the code to Amazon EC2 instances.

The application must comply with security policies and follow the principle of least privilege. The company must securely manage database credentials and API keys within the application code. The company must have the ability to rotate encryption keys on demand.

Which solution will meet these requirements?

A developer is building a three-tier web application that should be able to handle a minimum of 5000 requests per minute. Requirements state that the web tier should be completely stateless while the application maintains session state for the users.

How can session data be externalized, keeping latency at the LOWEST possible value?

A developer registered an AWS Lambda function as a target for an Application Load Balancer (ALB) using a CLI command. However, the Lambda function is not being invoked when the client sends requests through the ALB.

Why is the Lambda function not being invoked?

A company is building an ecommerce application. The company stores the application's static content in an Amazon S3 bucket. The application stores data that includes personally identifiable information (PII). The application makes dynamic requests in JSON format through an Amazon CloudFront distribution to an Amazon API Gateway REST API. The REST API invokes an AWS Lambda function that stores and queries data in Amazon DynamoDB.

The company must ensure that all PII data is encrypted at rest in DynamoDB. The company must also protect specific data fields more granularly. The company must ensure that the specified fields are encrypted at the edge. The specified fields must remain encrypted throughout the full stack of the application.

Which solution will meet these requirements?

A developer uses AWS CloudFormation to deploy an Amazon API Gateway API and an AWS Step Functions state machine. The state machine must reference the API Gateway API after the CloudFormation template is deployed. The developer needs a solution that uses the state machine to reference the API Gateway endpoint.

Which solution will meet these requirements MOST cost-effectively?

A company has an application that uses Amazon Cognito user pools as an identity provider. The company must secure access to user records. The company has set up multi-factor authentication (MFA). The company also wants to send a login activity notification by email every time a user logs in.

What is the MOST operationally efficient solution that meets this requirement?

A company is working on a new serverless application. A developer needs to find an automated way to deploy AWS Lambda functions and the dependent infrastructure with minimum coding effort. The application also needs to be reliable.

Which method will meet these requirements with the LEAST operational overhead?

A developer is building a video search application. Video files average 2.5 TB in size. Files must have instant access for the first 90 days. After 90 days, files can take more than 10 minutes to load.

Which solution will meet these requirements in the MOST cost-effective way?

A developer is building an ecommerce application that uses AWS Lambda functions. Each Lambda function performs a specific step in a customer order workflow, such as order processing and inventory management. The developer must ensure that the Lambda functions run in a specific order.

Which solution will meet this requirement with the LEAST operational overhead?

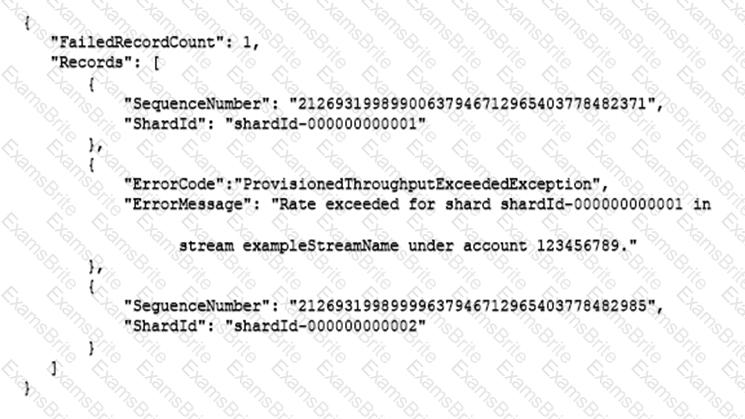

An application is processing clickstream data using Amazon Kinesis. The clickstream data feed into Kinesis experiences periodic spikes. The PutRecords API call occasionally fails and the logs show that the failed call returns the response shown below:

Which techniques will help mitigate this exception? (Choose two.)

A large company has its application components distributed across multiple AWS accounts. The company needs to collect and visualize trace data across these accounts.

What should be used to meet these requirements?

A developer has created a new IAM user that has the s3:PutObject permission to write to a specific Amazon S3 bucket. The S3 bucket uses server-side encryption with AWS KMS–managed keys (SSE-KMS) as the default encryption. When an application uses the access key and secret key of the IAM user to call the PutObject API operation, the application receives an Access Denied error.

What should the developer do to resolve this error?

A company has three AWS Lambda functions written in Node.js. The Lambda functions include a mix of custom code and open source modules. When bugs are occasionally detected in the open source modules, all three Lambda functions must be patched.

What is the MOST operationally efficient solution to deploy a patched open source library for all three Lambda functions?

A company is preparing to migrate an application to the company's first AWS environment. Before this migration, a developer is creating a proof-of-concept application to validate a model for building and deploying container-based applications on AWS.

Which combination of steps should the developer take to deploy the containerized proof-of-concept application with the LEAST operational effort? (Select TWO.)

An organization is using Amazon CloudFront to ensure that its users experience low-latency access to its web application. The organization has identified a need to encrypt all traffic between users and CloudFront, and all traffic between CloudFront and the web application.

How can these requirements be met? (Select TWO.)

A company hosts its application on AWS. The application runs on an Amazon Elastic Container Service (Amazon ECS) cluster that uses AWS Fargate. The cluster runs behind an Application Load Balancer. The application stores data in an Amazon Aurora database. A developer encrypts and manages database credentials inside the application.

The company wants to use a more secure credential storage method and implement periodic credential rotation.

Which solution will meet these requirements with the LEAST operational overhead?

A developer is troubleshooting a three-tier application, which is deployed on Amazon EC2 instances. There is a connectivity problem between the application servers and the database servers.

Which AWS services or tools should be used to identify the faulty component? (Select TWO.)

A company has point-of-sale devices across thousands of retail shops that synchronize sales transactions with a centralized system. The system includes an Amazon API Gateway API that exposes an AWS Lambda function. The Lambda function processes the transactions and stores the transactions in Amazon RDS for MySQL. The number of transactions increases rapidly during the day and is near zero at night.

How can a developer increase the elasticity of the system MOST cost-effectively?

A company operates on-premises data centers that run an image processing service composed of containerized applications on Kubernetes clusters. All applications share access to a common NFS file system for data storage.

The company is running out of NFS capacity and must migrate to AWS quickly. The Kubernetes clusters must be highly available on AWS.

Which combination of actions will meet these requirements? (Select TWO.)

A company needs to distribute firmware updates to its customers around the world.

Which service will allow easy and secure control of the access to the downloads at the lowest cost?

A team of developers is using an AWS CodePipeline pipeline as a continuous integration and continuous delivery (CI/CD) mechanism for a web application. A developer has written unit tests to programmatically test the functionality of the application code. The unit tests produce a test report that shows the results of each individual check. The developer now wants to run these tests automatically during the CI/CD process.

A developer is updating an Amazon API Gateway REST API to have a mock endpoint. The developer wants to update the integration request mapping template so the endpoint will respond to mock integration requests with specific HTTP status codes based on various conditions.

A company uses AWS Secrets Manager to store API keys for external REST services. The company uses an AWS Lambda function to rotate the API keys on a regular schedule.

Due to an error in the Lambda function, the API keys are successfully updated in AWS Secrets Manager but are not updated in the external REST services. Before investigating the root cause of the issue, the company wants to resume requests to the external REST services as quickly as possible.

Which solution will meet this requirement with the LEAST operational overhead?

A company runs an application on AWS. The application stores data in an Amazon DynamoDB table. Some queries are taking a long time to run. These slow queries involve an attribute that is not the table's partition key or sort key.

The amount of data that the application stores in the DynamoDB table is expected to increase significantly. A developer must increase the performance of the queries.

Which solution will meet these requirements?

A company has an application that is deployed on AWS Elastic Beanstalk. The application generates user-specific PDFs and stores the PDFs in an Amazon S3 bucket. The application then uses Amazon Simple Email Service (Amazon SES) to send the PDFs by email to subscribers.

Users no longer access the PDFs 90 days after the PDFs are generated. The S3 bucket is not versioned and contains many obsolete PDFs.

A developer must reduce the number of files in the S3 bucket by removing PDFs that are older than 90 days.

Which solution will meet this requirement with the LEAST development effort?

An Amazon Kinesis Data Firehose delivery stream is receiving customer data that contains personally identifiable information. A developer needs to remove pattern-based customer identifiers from the data and store the modified data in an Amazon S3 bucket.

What should the developer do to meet these requirements?

A developer has an application that makes batch requests directly to Amazon DynamoDB by using the BatchGetItem low-level API operation. The responses frequently return values in the UnprocessedKeys element.

Which actions should the developer take to increase the resiliency of the application when the batch response includes values in UnprocessedKeys? (Choose two.)
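
As an illustration only (not an answer key), one widely used way to cope with values in UnprocessedKeys is to resend just those keys with exponential backoff. The sketch below assumes a client object that exposes `batch_get_item(RequestItems=...)` in the shape of the boto3 DynamoDB client; the helper name `batch_get_with_retry`, the attempt limit, and the delay values are hypothetical.

```python
import random
import time


def batch_get_with_retry(client, request_items, max_attempts=5,
                         base_delay=0.05, sleep=time.sleep):
    """Call BatchGetItem and resend only UnprocessedKeys, backing off each time.

    `client` is assumed to behave like a boto3 DynamoDB client.
    Returns (items_by_table, leftover_keys); leftover_keys is empty on success.
    """
    items = {}
    pending = request_items
    for attempt in range(max_attempts):
        response = client.batch_get_item(RequestItems=pending)
        # Collect whatever the service managed to return in this round.
        for table, rows in response.get("Responses", {}).items():
            items.setdefault(table, []).extend(rows)
        pending = response.get("UnprocessedKeys") or {}
        if not pending:
            break
        if attempt < max_attempts - 1:
            # Exponential backoff with jitter before resending the leftovers.
            sleep(random.uniform(0, base_delay * (2 ** attempt)))
    return items, pending
```

Returning the leftover keys lets the caller decide whether to fail, queue the keys for later, or log them once the attempt budget is used up.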

A company had an Amazon RDS for MySQL DB instance that was named mysql-db. The DB instance was deleted within the past 90 days.

A developer needs to find which IAM user or role deleted the DB instance in the AWS environment.

Which solution will provide this information?

A company's application uses an Amazon API Gateway REST API and AWS Lambda functions to upload media files to and fetch media files from an Amazon S3 Standard bucket. The company runs a nightly job on an Amazon EC2 instance to create dashboards and other visualizations for application users. The job usually runs for 1 to 2 hours.

A developer observes request throttling while the function is running. The application generates multiple 429 exceptions in the Lambda function logs when files do not process successfully. The developer needs to resolve the issue and ensure that the application ingests all files.

Which solution will meet these requirements?

A company is creating a new application that gives users the ability to upload and share short video files. The average size of the video files is 10 MB. After a user uploads a file, a message needs to be placed into an Amazon Simple Queue Service (Amazon SQS) queue so the file can be processed. The files need to be accessible for processing within 5 minutes.

Which solution will meet these requirements MOST cost-effectively?

A developer creates a static website for their department. The developer deploys the static assets for the website to an Amazon S3 bucket and serves the assets with Amazon CloudFront. The developer uses origin access control (OAC) on the CloudFront distribution to access the S3 bucket.

The developer notices users can access the root URL and specific pages but cannot access directories without specifying a file name. For example, /products/index.html works, but /products returns an error. The developer needs to enable accessing directories without specifying a file name without exposing the S3 bucket publicly.

Which solution will meet these requirements?

A data visualization company wants to strengthen the security of its core applications. The applications are deployed on AWS across its development, staging, pre-production, and production environments. The company needs to encrypt all of its stored sensitive credentials. The sensitive credentials need to be automatically rotated. A version of the sensitive credentials needs to be stored for each environment.

Which solution will meet these requirements in the MOST operationally efficient way?

A developer is integrating Amazon ElastiCache in an application. The cache will store data from a database. The cached data must populate real-time dashboards. Which caching strategy will meet these requirements?

A developer is making changes to a custom application that uses AWS Elastic Beanstalk.

Which solutions will update the Elastic Beanstalk environment with the new application version after the developer completes the changes? (Select TWO.)

A company built a new application in the AWS Cloud. The company automated the bootstrapping of new resources with an Auto Scaling group by using AWS CloudFormation templates. The bootstrap scripts contain sensitive data.

The company needs a solution that is integrated with CloudFormation to manage the sensitive data in the bootstrap scripts.

Which solution will meet these requirements in the MOST secure way?

A software company is migrating a single-page application from on-premises servers to the AWS Cloud by using AWS Amplify Hosting. The application relies on an API that was created with an existing GraphQL schema. The company needs to migrate the API along with the application.

Which solution will meet this requirement with the LEAST amount of configuration?

A developer is building an application that processes a stream of user-supplied data. The data stream must be consumed by multiple Amazon EC2 based processing applications in parallel and in real time. Each processor must be able to resume without losing data if there is a service interruption. The application architect plans to add other processors in the near future, and wants to minimize the amount of data duplication involved.

Which solution will satisfy these requirements?

A company hosts a batch processing application on AWS Elastic Beanstalk with instances that run the most recent version of Amazon Linux. The application sorts and processes large datasets. In recent weeks, the application's performance has decreased significantly during a peak period for traffic. A developer suspects that the application issues are related to the memory usage. The developer checks the Elastic Beanstalk console and notices that memory usage is not being tracked.

How should the developer gather more information about the application performance issues?

A company is developing a serverless application that requires storage of sensitive API keys as environment variables for various services. The application requires the automatic rotation of the encryption keys every year.

Which solution will meet these requirements with no development effort?

A web application is using Amazon Kinesis Data Streams for clickstream data that may not be consumed for up to 12 hours.

How can the developer implement encryption at rest for data within the Kinesis Data Streams?

A company needs to package and deploy an application that uses AWS Lambda to compress and decompress video clips. The application uses a video codec library that is larger than 250 MB. The application uses the library to compress the videos before storage and to decompress the videos upon retrieval.

A developer is building an application that stores user activity data in an Amazon DynamoDB table. The developer is writing a new AWS Lambda function to process the activity data. The developer grants the IAM role that the function assumes permission to query the table. The developer must ensure that the function does not have access to personally identifiable information (PII) that is stored in some of the table item attributes.

Which solutions will meet these requirements? (Select TWO.)

A company runs a highly available application in multiple AWS Regions. The application requires access to a secret value that is stored in AWS Secrets Manager. The secret value must be available in all Regions where the application operates. The secret value must remain consistent across the Regions.

Which solution will meet these requirements with the LEAST operational overhead?

A developer is working on an ecommerce application that stores data in an Amazon RDS for MySQL cluster. The developer needs to implement a caching layer for the application to retrieve information about the most viewed products.

Which solution will meet these requirements?

A developer is writing an AWS Lambda function. The developer wants to log key events that occur while the Lambda function runs. The developer wants to include a unique identifier to associate the events with a specific function invocation. The developer adds the following code to the Lambda function:

Which solution will meet this requirement?

A developer is creating an AWS Lambda function. The Lambda function needs an external library to connect to a third-party solution. The external library is a collection of files with a total size of 100 MB. The developer needs to make the external library available to the Lambda execution environment and reduce the Lambda package space.

Which solution will meet these requirements with the LEAST operational overhead?

A developer has deployed an AWS Lambda function that is subscribed to an Amazon Simple Notification Service (Amazon SNS) topic. The developer must implement a solution to add a record of each Lambda function invocation to an Amazon Simple Queue Service (Amazon SQS) queue.

Which solution will meet this requirement?

A developer needs to use Amazon DynamoDB to store customer orders. The developer's company requires all customer data to be encrypted at rest with a key that the company generates.

What should the developer do to meet these requirements?

A developer works for a company that only has a single pre-production AWS account with an AWS CloudFormation AWS SAM stack. The developer made changes to an existing AWS Lambda function specified in the AWS SAM template and additional Amazon SNS topics.

The developer wants to do a one-time deployment of the changes to test if the changes are working. The developer does not want to impact the existing pre-production application that is currently being used by other team members as part of the release pipeline.

Which solution will meet these requirements?

A company uses a custom root certificate authority certificate chain (Root CA Cert) that is 10 KB in size to generate SSL certificates for its on-premises HTTPS endpoints. One of the company’s cloud-based applications has hundreds of AWS Lambda functions that pull data from these endpoints. A developer updated the trust store of the Lambda execution environment to use the Root CA Cert when the Lambda execution environment is initialized. The developer bundled the Root CA Cert as a text file in the Lambda deployment bundle.

After 3 months of development, the Root CA Cert is no longer valid and must be updated. The developer needs a more efficient solution to update the Root CA Cert for all deployed Lambda functions. The solution must not include rebuilding or updating all Lambda functions that use the Root CA Cert. The solution must also work for all development, testing, and production environments. Each environment is managed in a separate AWS account.

Which combination of steps should the developer take to meet these requirements MOST cost-effectively? (Select TWO.)

A developer wants to add request validation to a production environment Amazon API Gateway API. The developer needs to test the changes before the API is deployed to the production environment. For the test, the developer will send test requests to the API through a testing tool.

Which solution will meet these requirements with the LEAST operational overhead?

A developer is writing an application that will retrieve sensitive data from a third-party system. The application will format the data into a PDF file. The PDF file could be more than 1 MB. The application will encrypt the data to disk by using AWS Key Management Service (AWS KMS). The application will decrypt the file when a user requests to download it. The retrieval and formatting portions of the application are complete.

The developer needs to use the GenerateDataKey API to encrypt the PDF file so that the PDF file can be decrypted later. The developer needs to use an AWS KMS symmetric customer managed key for encryption.

Which solutions will meet these requirements?

A developer has been asked to create an AWS Lambda function that is invoked any time updates are made to items in an Amazon DynamoDB table. The function has been created and appropriate permissions have been added to the Lambda execution role. Amazon DynamoDB streams have been enabled for the table, but the function is still not being invoked.

Which option would enable DynamoDB table updates to invoke the Lambda function?

A developer is writing an application that will provide data files to an external company. The external company needs to verify that the data is not modified in transit.

How can the developer use AWS KMS to prove the integrity of the transferred data?

A developer is creating a mobile app that calls a backend service by using an Amazon API Gateway REST API. For integration testing during the development phase, the developer wants to simulate different backend responses without invoking the backend service.

Which solution will meet these requirements with the LEAST operational overhead?

A development team uses an Amazon DynamoDB table as a database for an application. The team notices errors and slowdowns in the application during peak usage hours. The slowdowns and errors occur during a surge of user logins. The application receives frequent write requests. Application logs indicate that write requests are being throttled.

The development team needs to reduce the application latency and resolve the throttling errors.

Which solutions will meet these requirements? (Select TWO.)

A company is creating a new feature for existing software. Before the company fully releases a new version of the software, the company wants to test the feature.

The company needs to gather feedback about the feature from a small group of users while the current software version remains deployed. If the testing validates the feature, the company needs to deploy the new software version to all other users at the same time.

Which deployment strategy will meet these requirements?

A developer is receiving HTTP 400: ThrottlingException errors intermittently when calling the Amazon CloudWatch API. When a call fails, no data is retrieved.

What best practice should first be applied to address this issue?

An ecommerce company manages its application's infrastructure by using AWS Elastic Beanstalk. A developer wants to deploy the new version of the application with the least possible application downtime. The developer also must minimize the application's rollback time if there are issues with the deployment.

Which approach will meet these requirements?

A developer has created an AWS Lambda function to provide notification through Amazon Simple Notification Service (Amazon SNS) whenever a file is uploaded to Amazon S3 that is larger than 50 MB. The developer has deployed and tested the Lambda function by using the CLI. However, when the event notification is added to the S3 bucket and a 3,000 MB file is uploaded, the Lambda function does not launch.

Which of the following is a possible reason for the Lambda function's inability to launch?

A developer is using AWS Step Functions to automate a workflow. The workflow defines each step as an AWS Lambda function task. The developer notices that runs of the Step Functions state machine fail in the GetResource task with either an IllegalArgumentException error or a TooManyRequestsException error.

The developer wants the state machine to stop running when the state machine encounters an IllegalArgumentException error. The state machine needs to retry the GetResource task one additional time after 10 seconds if the state machine encounters a TooManyRequestsException error. If the second attempt fails, the developer wants the state machine to stop running.

How can the developer implement the Lambda retry functionality without adding unnecessary complexity to the state machine?
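
For orientation, Amazon States Language allows a Task state to carry a `Retry` array whose entries name the errors to retry, the wait before the first retry, and the maximum number of retries. The fragment below is a sketch of how the retry behavior described above could be written, built as a Python dict and serialized to JSON. The Lambda ARN is a placeholder, and the exact error name that Step Functions reports depends on the runtime and on whether the function code or the Lambda service raised it, so the bare names from the question are an assumption.

```python
import json

# Sketch of a Task state definition; the ARN below is a placeholder.
get_resource_state = {
    "Type": "Task",
    "Resource": "arn:aws:lambda:REGION:ACCOUNT_ID:function:GetResource",
    "Retry": [
        {
            # Retry only this error: wait 10 seconds, then try once more.
            "ErrorEquals": ["TooManyRequestsException"],
            "IntervalSeconds": 10,
            "MaxAttempts": 1,
        }
    ],
    # No retrier or catcher matches IllegalArgumentException, so that
    # error fails the execution immediately.
    "End": True,
}

print(json.dumps({"GetResource": get_resource_state}, indent=2))
```

Keeping the retry on the Task state itself avoids extra Choice or Wait states in the workflow.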

A developer is setting up infrastructure by using AWS CloudFormation. If an error occurs when the resources described in the CloudFormation template are provisioned, successfully provisioned resources must be preserved. The developer must provision and update the CloudFormation stack by using the AWS CLI.

Which solution will meet these requirements?

A company has a website that displays a daily newsletter. When a user visits the website, an AWS Lambda function processes the browser's request and queries the company's on-premises database to obtain the current newsletter. The newsletters are stored in English. The Lambda function uses the Amazon Translate TranslateText API operation to translate the newsletters, and the translation is displayed to the user.

Due to an increase in popularity, the website's response time has slowed. The database is overloaded. The company cannot change the database and needs a solution that improves the response time of the Lambda function.

Which solution meets these requirements?

A company is developing a web application that allows its employees to upload a profile picture to a private Amazon S3 bucket. There is no size limit for the profile pictures, which should be displayed every time an employee logs in. For security reasons, the pictures cannot be publicly accessible.

What is a viable long-term solution for this scenario?

A developer is working on an application that will store protected health information (PHI) in an Amazon RDS database. The developer applies encryption to the database. The developer must also encrypt the PHI data separately to prevent administrators from accessing the data. Because some of the PHI data files are large, the developer must encrypt the PHI data in the application locally before saving the data to the database.

Which solution will meet these requirements in the MOST secure way?

A developer maintains a serverless application that uses AWS Lambda to process financial transaction files that have been uploaded to an Amazon S3 bucket. The developer has scheduled a Lambda function to run once each hour to process the files.

Over time, the frequency of file uploads to the S3 bucket increases. The increasing number of file uploads occasionally causes the Lambda function to exceed its 15-minute execution timeout.

The developer needs to modify the application's architecture to handle increased file uploads and decrease file processing time. The developer must also ensure that the application does not duplicate the processing of files.

Which solution will meet these requirements?

A developer has designed an application to store incoming data as JSON files in Amazon S3 objects. Custom business logic in an AWS Lambda function then transforms the objects, and the Lambda function loads the data into an Amazon DynamoDB table. Recently, the workload has experienced sudden and significant changes in traffic. The flow of data to the DynamoDB table is becoming throttled.

The developer needs to implement a solution to eliminate the throttling and load the data into the DynamoDB table more consistently.

Which solution will meet these requirements?

A developer is building an image-processing application that includes an AWS Lambda function. The Lambda function moves images from one AWS service to another AWS service for image processing. For images that are larger than 2 MB, the Lambda function returns the following error: "Task timed out after 3.01 seconds."

The developer needs to resolve the error without modifying the Lambda function code.

Which solution will meet these requirements?

A company hosts a client-side web application for one of its subsidiaries on Amazon S3. The web application can be accessed through Amazon CloudFront from https://www.example.com. After a successful rollout, the company wants to host three more client-side web applications for its remaining subsidiaries on three separate S3 buckets.

To achieve this goal, a developer moves all the common JavaScript files and web fonts to a central S3 bucket that serves the web applications. However, during testing, the developer notices that the browser blocks the JavaScript files and web fonts.

What should the developer do to prevent the browser from blocking the JavaScript files and web fonts?

A company is migrating an on-premises database to Amazon RDS for MySQL. The company has read-heavy workloads. The company wants to refactor the code to achieve optimum read performance for queries.

Which solution will meet this requirement with the LEAST current and future effort?

A developer is migrating a containerized application from an on-premises environment to the AWS Cloud. The developer is using the AWS CDK to provision a container in Amazon ECS on AWS Fargate. The container is behind an Application Load Balancer (ALB).

When the developer deploys the stack, the deployment fails because the ALB fails health checks. The developer needs to resolve the failed health checks.

Which solutions will meet this requirement? (Select TWO.)

A developer is incorporating AWS X-Ray into an application that handles personally identifiable information (PII). The application is hosted on Amazon EC2 instances. The application trace messages include encrypted PII and go to Amazon CloudWatch. The developer needs to ensure that no PII goes outside of the EC2 instances.

Which solution will meet these requirements?

A company is planning to use AWS CodeDeploy to deploy an application to Amazon Elastic Container Service (Amazon ECS). During the deployment of a new version of the application, the company initially must expose only 10% of live traffic to the new version of the deployed application. Then, after 15 minutes elapse, the company must route all the remaining live traffic to the new version of the deployed application.

Which CodeDeploy predefined configuration will meet these requirements?

A developer is configuring an application's deployment environment in AWS CodePipeline. The application code is stored in a GitHub repository. The developer wants to ensure that the repository package's unit tests run in the new deployment environment. The developer has already set the pipeline's source provider to GitHub and has specified the repository and branch to use in the deployment.

Which combination of steps should the developer take next to meet these requirements with the LEAST overhead? (Select TWO.)

A developer is creating an AWS Lambda function that consumes messages from an Amazon Simple Queue Service (Amazon SQS) standard queue. The developer notices that the Lambda function processes some messages multiple times.

How should the developer resolve this issue MOST cost-effectively?

A developer is building a highly secure healthcare application using serverless components. This application requires writing temporary data to /tmp storage on an AWS Lambda function.

How should the developer encrypt this data?

An application reads data from an Amazon Aurora global database with clusters in two AWS Regions. Database credentials are stored in AWS Secrets Manager in the primary Region and are rotated regularly.

The application must be able to run in both Regions and remain highly available.

Which combination of actions will meet these requirements? (Select TWO.)

A developer is building an application that needs to store an API key. An AWS Lambda function needs to use the API key. The developer's company requires secrets to be encrypted at rest by an AWS KMS key. The company must control key rotation.

Which solutions will meet these requirements? (Select TWO.)

A developer is building an application that gives users the ability to view bank accounts from multiple sources in a single dashboard. The developer has automated the process to retrieve API credentials for these sources. The process invokes an AWS Lambda function that is associated with an AWS CloudFormation custom resource.

The developer wants a solution that will store the API credentials with minimal operational overhead.

Which solution will meet these requirements?

A developer is building a multi-tenant application using AWS Lambda, Amazon S3, and Amazon DynamoDB. Each S3 object prefix represents a tenant name, and DynamoDB uses the tenant name as the partition key.

The developer must prevent cross-tenant data access during processing.

Which combination of actions will meet this requirement? (Select THREE.)

A company has an Amazon S3 bucket that contains sensitive data. The data must be encrypted in transit and at rest. The company encrypts the data in the S3 bucket by using an AWS Key Management Service (AWS KMS) key. A developer needs to grant several other AWS accounts the permission to use the S3 GetObject operation to retrieve the data from the S3 bucket.

How can the developer enforce that all requests to retrieve the data provide encryption in transit?
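
For context, S3 bucket policies can test the global condition key `aws:SecureTransport`, which is `false` for requests sent over plain HTTP. The sketch below builds one such deny statement as a Python dict. The function name and the bucket name `example-bucket` are hypothetical, and the statement is an illustration of the condition key rather than a complete policy for the cross-account scenario in the question.

```python
import json


def deny_insecure_transport_policy(bucket_name):
    """Build a bucket policy that denies GetObject over non-TLS connections."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                # aws:SecureTransport is "false" when the request is not HTTPS.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# "example-bucket" is a placeholder name.
print(json.dumps(deny_insecure_transport_policy("example-bucket"), indent=2))
```

An explicit deny of this kind applies regardless of which account makes the request, so separate allow statements would still be needed to grant the other accounts access.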

A developer is storing sensitive data generated by an application in Amazon S3. The developer wants to encrypt the data at rest. A company policy requires an audit trail of when the AWS Key Management Service (AWS KMS) key was used and by whom.

Which encryption option will meet these requirements?

A company hosts its application in the us-west-1 Region. The company wants to add redundancy in the us-east-1 Region. The application secrets are stored in AWS Secrets Manager in us-west-1. A developer needs to replicate the secrets to us-east-1.

Which solution will meet this requirement?

A developer needs to deploy an application running on AWS Fargate using Amazon ECS. The application has environment variables that must be passed to a container for the application to initialize.

How should the environment variables be passed to the container?

A developer is working on an app for a company that uses an Amazon DynamoDB table named Orders to store customer orders. The table uses OrderID as the partition key and there is no sort key. The table contains more than 100,000 records. The developer needs to add a functionality that will retrieve all Orders records that contain an OrderSource attribute with the MobileApp value.

Which solution will improve the user experience in the MOST efficient way?

A company is using an Amazon API Gateway REST API endpoint as a webhook to publish events from an on-premises source control management (SCM) system to Amazon EventBridge. The company has configured an EventBridge rule to listen for the events and to control application deployment in a central AWS account. The company needs to receive the same events across multiple receiver AWS accounts.

How can a developer meet these requirements without changing the configuration of the SCM system?

A company is using Amazon RDS as the backend database for its application. After a recent marketing campaign, a surge of read requests to the database increased the latency of data retrieval from the database.

The company has decided to implement a caching layer in front of the database. The cached content must be encrypted and must be highly available.

Which solution will meet these requirements?

A company has an ecommerce application. To track product reviews, the company's development team uses an Amazon DynamoDB table.

Every record includes the following:

• A Review ID: a 16-digit universally unique identifier (UUID)

• A Product ID and User ID: 16-digit UUIDs that reference other tables

• A Product Rating on a scale of 1-5

• An optional comment from the user

The table partition key is the Review ID. The most performed query against the table is to find the 10 reviews with the highest rating for a given product.

Which index will provide the FASTEST response for this query?

A developer is building a web application that uses Amazon API Gateway to expose an AWS Lambda function to process requests from clients. During testing, the developer notices that the API Gateway times out even though the Lambda function finishes under the set time limit.

Which of the following API Gateway metrics in Amazon CloudWatch can help the developer troubleshoot the issue? (Choose two.)

A developer is creating an application that uses an AWS Lambda function to transform and load data from an Amazon S3 bucket. When the developer tests the application, the developer finds that some invocations of the Lambda function are slower than others.

The developer needs to update the Lambda function to have predictable invocation durations that run with low latency. Any initialization activities, such as loading libraries and instantiating clients, must run during allocation time rather than during actual function invocations.

Which combination of steps will meet these requirements? (Select TWO.)

A company has many microservices that are comprised of AWS Lambda functions. Multiple teams within the company split ownership of the microservices.

An application reads configuration values from environment variables that are contained in the Lambda functions. During a security audit, the company discovers that some of the environment variables contain sensitive information.

The company's security policy requires each team to have full control over the rotation of AWS KMS keys that the team uses for its respective microservices.

Which solution will meet these requirements?

For a deployment using AWS CodeDeploy, what is the run order of the hooks for in-place deployments?

A developer is working on an ecommerce website. The developer wants to review server logs without logging in to each of the application servers individually. The website runs on multiple Amazon EC2 instances, is written in Python, and needs to be highly available.

How can the developer update the application to meet these requirements with MINIMUM changes?

A company is migrating legacy internal applications to AWS. Leadership wants to rewrite the internal employee directory to use native AWS services. A developer needs to create a solution for storing employee contact details and high-resolution photos for use with the new application.

Which solution will enable the search and retrieval of each employee's individual details and high-resolution photos using AWS APIs?

A company is building an application for stock trading. The application needs sub-millisecond latency for processing trade requests. The company uses Amazon DynamoDB to store all the trading data that is used to process each trading request. A development team performs load testing on the application and finds that the data retrieval time is higher than expected. The development team needs a solution that reduces the data retrieval time with the least possible effort.

Which solution meets these requirements?