SnowPro Advanced: Architect Certification Exam

Last Update Feb 28, 2026

Total Questions: 182

What integration object should be used to place restrictions on where data may be exported?

A.

Stage integration

B.

Security integration

C.

Storage integration

D.

API integration

A company is following the Data Mesh principles, including domain separation, and chose one Snowflake account for its data platform.

An Architect created two data domains to produce two data products. The Architect needs a third data domain that will use both of the data products to create an aggregate data product. The read access to the data products will be granted through a separate role.

Based on the Data Mesh principles, how should the third domain be configured to create the aggregate product if it has been granted the two read roles?

A.

Use secondary roles for all users.

B.

Create a hierarchy between the two read roles.

C.

Request a technical ETL user with the sysadmin role.

D.

Request that the two data domains share data using the Data Exchange.

Why does a conditional multi-table insert option support the Data Vault data model?

A.

Data can be inserted in parallel to hubs and satellites using surrogate keys.

B.

Data can be inserted in parallel to dimensions and facts using surrogate keys.

C.

Data can be inserted in sequence to hubs and satellites using surrogate keys.

D.

Data can be inserted in sequence to dimensions and facts using surrogate keys.

An Architect is designing partitioned external tables for a Snowflake data lake. The data lake size may grow over time, and partition definitions may need to change in the future.

How can these requirements be met?

A.

Use the PARTITION BY (

B.

Use partition_type = USER_SPECIFIED when creating the external table.

C.

Set METADATA$EXTERNAL_TABLE_PARTITION = MANUAL.

D.

Alter the table using ADD_PARTITION_COLUMN before defining a new partition column.

What are some of the characteristics of result set caches? (Choose three.)

A.

Time Travel queries can be executed against the result set cache.

B.

Snowflake persists the data results for 24 hours.

C.

Each time persisted results for a query are used, a 24-hour retention period is reset.

D.

The data stored in the result cache will contribute to storage costs.

E.

The retention period can be reset for a maximum of 31 days.

F.

The result set cache is not shared between warehouses.

In Snowflake, persisted query results are retained for 24 hours (B), and each reuse of a persisted result resets that 24-hour retention period (C), up to a maximum of 31 days from the time the query was first executed (E). The result set cache exists to avoid re-executing an identical query while the underlying data is unchanged, which reduces compute usage and speeds up query responses. The cache is maintained in the cloud services layer rather than in an individual warehouse, so persisted results can be reused across warehouses, which rules out F. Cached results do not contribute to storage costs, which rules out D.
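As a rough illustration of result reuse, the sketch below runs the same statement twice and then post-processes the persisted result. The table `sales.public.orders` and its columns are hypothetical; `USE_CACHED_RESULT`, `RESULT_SCAN`, and `LAST_QUERY_ID` are standard Snowflake features. Whether the second run is actually served from the cache depends on conditions such as unchanged underlying data and identical query text.

```sql
-- Result reuse is enabled by default; it is set explicitly here for clarity.
ALTER SESSION SET USE_CACHED_RESULT = TRUE;

-- First execution: runs on the current warehouse and persists its result.
-- (sales.public.orders is a hypothetical table used only for illustration.)
SELECT region, SUM(amount) AS total_amount
FROM sales.public.orders
GROUP BY region;

-- Second execution with identical text: eligible to be answered from the
-- persisted result, provided the underlying data has not changed.
SELECT region, SUM(amount) AS total_amount
FROM sales.public.orders
GROUP BY region;

-- Post-process the persisted result of the previous statement
-- without re-running the aggregation.
SELECT *
FROM TABLE(RESULT_SCAN(LAST_QUERY_ID()))
WHERE total_amount > 1000;
```

Setting `USE_CACHED_RESULT = FALSE` for the session is a common way to bypass result reuse when benchmarking warehouse performance.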

An Architect is designing a solution that will be used to process changed records in an orders table. Newly-inserted orders must be loaded into the f_orders fact table, which will aggregate all the orders by multiple dimensions (time, region, channel, etc.). Existing orders can be updated by the sales department within 30 days after the order creation. In case of an order update, the solution must perform two actions:

1. Update the order in the F_ORDERS fact table.

2. Load the changed order data into the special table ORDER_REPAIRS.

This table is used by the Accounting department once a month. If the order has been changed, the Accounting team needs to know the latest details and perform the necessary actions based on the data in the order_repairs table.

What data processing logic design will be the MOST performant?

A.

Use one stream and one task.

B.

Use one stream and two tasks.

C.

Use two streams and one task.

D.

Use two streams and two tasks.

Company A would like to share data in Snowflake with Company B. Company B is not on the same cloud platform as Company A.

What is required to allow data sharing between these two companies?

A.

Create a pipeline to write shared data to a cloud storage location in the target cloud provider.

B.

Ensure that all views are persisted, as views cannot be shared across cloud platforms.

C.

Set up data replication to the region and cloud platform where the consumer resides.

D.

Company A and Company B must agree to use a single cloud platform: Data sharing is only possible if the companies share the same cloud provider.

A global company needs to securely share its sales and inventory data with a vendor using a Snowflake account.

The company has its Snowflake account in the AWS eu-west-2 Europe (London) region. The vendor's Snowflake account is on the Azure platform in the West Europe region. How should the company's Architect configure the data share?

A.

1. Create a share. 2. Add objects to the share. 3. Add a consumer account to the share for the vendor to access.

B.

1. Create a share. 2. Create a reader account for the vendor to use. 3. Add the reader account to the share.

C.

1. Create a new role called db_share. 2. Grant the db_share role privileges to read data from the company database and schema. 3. Create a user for the vendor. 4. Grant the db_share role to the vendor's users.

D.

1. Promote an existing database in the company's local account to primary. 2. Replicate the database to Snowflake on Azure in the West Europe region. 3. Create a share and add objects to the share. 4. Add a consumer account to the share for the vendor to access.

Which SQL ALTER command will MAXIMIZE memory and compute resources for a Snowpark stored procedure when executed on the snowpark_opt_wh warehouse?

A.

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 1;

B.

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 2;

C.

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 8;

D.

ALTER WAREHOUSE snowpark_opt_wh SET MAX_CONCURRENCY_LEVEL = 16;

A company is storing large numbers of small JSON files (ranging from 1-4 bytes) that are received from IoT devices and sent to a cloud provider. In any given hour, 100,000 files are added to the cloud provider.

What is the MOST cost-effective way to bring this data into a Snowflake table?

A.

An external table

B.

A pipe

C.

A stream

D.

A copy command at regular intervals

A company’s daily Snowflake workload consists of a huge number of concurrent queries triggered between 9pm and 11pm. At the individual level, these queries are smaller statements that get completed within a short time period.

What configuration can the company’s Architect implement to enhance the performance of this workload? (Choose two.)

A.

Enable a multi-clustered virtual warehouse in maximized mode during the workload duration.

B.

Set the MAX_CONCURRENCY_LEVEL to a higher value than its default value of 8 at the virtual warehouse level.

C.

Increase the size of the virtual warehouse to size X-Large.

D.

Reduce the amount of data that is being processed through this workload.

E.

Set the connection timeout to a higher value than its default.

Options A and B can enhance the performance of this workload, which consists of a large number of concurrent, short-running queries.

Running a multi-cluster virtual warehouse in maximized mode means the minimum and maximum cluster counts are set to the same value (greater than 1), so all clusters start when the warehouse starts rather than being added as load builds up. This improves the concurrency and throughput of the workload by minimizing or preventing queuing. Maximized mode suits workloads that require high performance and low latency and are less sensitive to credit consumption [1].

Setting MAX_CONCURRENCY_LEVEL to a value higher than its default of 8 at the virtual warehouse level allows each cluster to run more queries concurrently. This can improve the utilization of warehouse resources, especially for small, fast queries that do not need much processing power. The MAX_CONCURRENCY_LEVEL parameter can be set when creating or modifying a warehouse, and it can be changed at any time [2].

[1] Snowflake Documentation: Scaling Policy for Multi-cluster Warehouses

[2] Snowflake Documentation: MAX_CONCURRENCY_LEVEL
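The two settings discussed above could be applied roughly as follows. This is a sketch under assumptions: the warehouse name `reporting_wh`, the cluster count of 4, and the concurrency level of 12 are illustrative values, not recommendations, and multi-cluster warehouses require an edition that supports them.

```sql
-- Maximized mode: MIN_CLUSTER_COUNT equals MAX_CLUSTER_COUNT (and is > 1),
-- so every cluster runs whenever the warehouse is running.
-- reporting_wh and the value 4 are hypothetical.
ALTER WAREHOUSE reporting_wh SET
  MIN_CLUSTER_COUNT = 4
  MAX_CLUSTER_COUNT = 4;

-- Allow more concurrent statements per cluster than the default of 8.
-- The value 12 is an illustrative choice for short, lightweight queries.
ALTER WAREHOUSE reporting_wh SET MAX_CONCURRENCY_LEVEL = 12;

-- After the 9pm-11pm window, the settings can be reverted, for example:
ALTER WAREHOUSE reporting_wh SET
  MIN_CLUSTER_COUNT = 1
  MAX_CLUSTER_COUNT = 4;
ALTER WAREHOUSE reporting_wh UNSET MAX_CONCURRENCY_LEVEL;
```

Raising the concurrency level gives each statement a smaller share of a cluster's resources, so it tends to help only when the individual queries are as lightweight as the ones described in the question.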

A company is using Snowflake in Azure in the Netherlands. The company analyst team also has data in JSON format that is stored in an Amazon S3 bucket in the AWS Singapore region that the team wants to analyze.

The Architect has been given the following requirements:

1. Provide access to frequently changing data

2. Keep egress costs to a minimum

3. Maintain low latency

How can these requirements be met with the LEAST amount of operational overhead?

A.

Use a materialized view on top of an external table against the S3 bucket in AWS Singapore.

B.

Use an external table against the S3 bucket in AWS Singapore and copy the data into transient tables.

C.

Copy the data between providers from S3 to Azure Blob storage to collocate, then use Snowpipe for data ingestion.

D.

Use AWS Transfer Family to replicate data between the S3 bucket in AWS Singapore and an Azure Netherlands Blob storage, then use an external table against the Blob storage.

An Architect needs to design a Snowflake account and database strategy to store and analyze large amounts of structured and semi-structured data. There are many business units and departments within the company. The requirements are scalability, security, and cost efficiency.

What design should be used?

A.

Create a single Snowflake account and database for all data storage and analysis needs, regardless of data volume or complexity.

B.

Set up separate Snowflake accounts and databases for each department or business unit, to ensure data isolation and security.

C.

Use Snowflake's data lake functionality to store and analyze all data in a central location, without the need for structured schemas or indexes.

D.

Use a centralized Snowflake database for core business data, and use separate databases for departmental or project-specific data.

An Architect needs to grant a group of ORDER_ADMIN users the ability to clean old data in an ORDERS table (deleting all records older than 5 years), without granting any privileges on the table. The group’s manager (ORDER_MANAGER) has full DELETE privileges on the table.

How can the ORDER_ADMIN role be enabled to perform this data cleanup, without needing the DELETE privilege held by the ORDER_MANAGER role?

A.

Create a stored procedure that runs with caller’s rights, including the appropriate "> 5 years" business logic, and grant USAGE on this procedure to ORDER_ADMIN. The ORDER_MANAGER role owns the procedure.

B.

Create a stored procedure that can be run using both caller’s and owner’s rights (allowing the user to specify which rights are used during execution), and grant USAGE on this procedure to ORDER_ADMIN. The ORDER_MANAGER role owns the procedure.

C.

Create a stored procedure that runs with owner’s rights, including the appropriate "> 5 years" business logic, and grant USAGE on this procedure to ORDER_ADMIN. The ORDER_MANAGER role owns the procedure.

D.

This scenario would actually not be possible in Snowflake – any user performing a DELETE on a table requires the DELETE privilege to be granted to the role they are using.

When loading data into a table that captures the load time in a column with a default value of either CURRENT_TIME() or CURRENT_TIMESTAMP(), what will occur?

A.

All rows loaded using a specific COPY statement will have varying timestamps based on when the rows were inserted.

B.

Any rows loaded using a specific COPY statement will have varying timestamps based on when the rows were read from the source.

C.

Any rows loaded using a specific COPY statement will have varying timestamps based on when the rows were created in the source.

D.

All rows loaded using a specific COPY statement will have the same timestamp value.

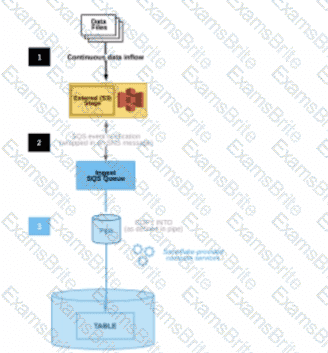

The diagram shows the process flow for Snowpipe auto-ingest with Amazon Simple Notification Service (SNS) with the following steps:

Step 1: Data files are loaded in a stage.

Step 2: An Amazon S3 event notification, published by SNS, informs Snowpipe via Amazon Simple Queue Service (SQS) that files are ready to load. Snowpipe copies the files into a queue.

Step 3: A Snowflake-provided virtual warehouse loads data from the queued files into the target table based on parameters defined in the specified pipe.

If an AWS Administrator accidentally deletes the SQS subscription to the SNS topic in Step 2, what will happen to the pipe that references the topic to receive event messages from Amazon S3?

A.

The pipe will continue to receive the messages as Snowflake will automatically restore the subscription to the same SNS topic and will recreate the pipe by specifying the same SNS topic name in the pipe definition.

B.

The pipe will no longer be able to receive the messages and the user must wait for 24 hours from the time when the SNS topic subscription was deleted. Pipe recreation is not required as the pipe will reuse the same subscription to the existing SNS topic after 24 hours.

C.

The pipe will continue to receive the messages as Snowflake will automatically restore the subscription by creating a new SNS topic. Snowflake will then recreate the pipe by specifying the new SNS topic name in the pipe definition.

D.

The pipe will no longer be able to receive the messages. To restore the system immediately, the user needs to manually create a new SNS topic with a different name and then recreate the pipe by specifying the new SNS topic name in the pipe definition.
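For context, a pipe that participates in the auto-ingest flow described in this question might be defined along the following lines. All object names and the SNS topic ARN are hypothetical placeholders, and the sketch assumes the target table has a single VARIANT column to receive JSON documents; `AUTO_INGEST`, `AWS_SNS_TOPIC`, and `SYSTEM$PIPE_STATUS` are standard Snowflake syntax.

```sql
-- Pipe that loads staged files when S3 event notifications arrive
-- through the referenced SNS topic. The ARN below is a placeholder.
CREATE PIPE my_db.my_schema.orders_pipe
  AUTO_INGEST = TRUE
  AWS_SNS_TOPIC = 'arn:aws:sns:us-west-2:001234567890:s3_mybucket'
  AS
  COPY INTO my_db.my_schema.orders_raw      -- hypothetical target table
  FROM @my_db.my_schema.orders_stage        -- hypothetical external stage
  FILE_FORMAT = (TYPE = 'JSON');

-- Inspect the pipe's execution state and notification channel.
SELECT SYSTEM$PIPE_STATUS('my_db.my_schema.orders_pipe');
```

The topic name is part of the pipe definition, which is why the answer options discuss recreating the pipe when the topic or its subscription changes.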

An Architect needs to meet a company requirement to ingest files from the company's AWS storage accounts into the company's Snowflake Google Cloud Platform (GCP) account. How can the ingestion of these files into the company's Snowflake account be initiated? (Select TWO).

A.

Configure the client application to call the Snowpipe REST endpoint when new files have arrived in Amazon S3 storage.

B.

Configure the client application to call the Snowpipe REST endpoint when new files have arrived in Amazon S3 Glacier storage.

C.

Create an AWS Lambda function to call the Snowpipe REST endpoint when new files have arrived in Amazon S3 storage.

D.

Configure AWS Simple Notification Service (SNS) to notify Snowpipe when new files have arrived in Amazon S3 storage.

E.

Configure the client application to issue a COPY INTO